Securing AI Agents by Default Today to Prevent Risks from Unretired Identities Resurfacing Tomorrow

- Token Security says the main risk around AI agent identities comes from their scale and the lack of visibility.

- Shlomo explains that uncertainty often signals a loss of control over AI agent identities.

- Making secure access the default, with standardized processes and built-in guardrails, enables fast, secure development.

- The scale and constant activity of AI agent identities make automation necessary, as manual controls cannot keep up.

- Organizations are likely to move toward shorter-lived, task-specific AI agent identities that are automatically retired to reduce risk.

In this interview, Ido Shlomo, Co-Founder and CTO of Token Security, outlines how the rapid growth of AI agent identities is changing the way organizations think about access, governance, and risk. Shlomo draws on years of experience leading cybersecurity teams across operations, research, and engineering.

He explains that Token Security's focus is on giving teams clear visibility into AI agent identities, identifying risk, and securing those identities without slowing the business. Builders are incentivized to ship products quickly, and when secure paths are slow or unclear, shortcuts become a predictable outcome.

Shlomo notes that making the secure path the default allows teams to move fast without inadvertently bypassing governance.

As AI agent identities scale and operate continuously, this approach increasingly depends on automation, because manual controls cannot keep up with their volume and constant activity.

Read on to understand how attackers gain access, jailbreak AI agents, and move through systems undetected.

Vishwa: As AI agents spread across enterprise environments and become more autonomous and powerful, what core risks is Token Security focused on addressing?

Ido: What we’re seeing is that AI agent identities are now the fastest growing part of the identity surface inside most organizations. These agents have a lot of access and often operate with very little oversight.

The main risk comes from that combination of scale and lack of visibility. When you don’t know what these agents are doing, or even how many of them exist, it becomes easy for threat actors to take advantage.

Our focus is on giving teams a clear picture of all their AI agent identities and helping them understand which ones matter, which ones are risky, and how to secure them in a way that doesn’t slow the business down.

Vishwa: What are the earliest signs that a company is losing control of its AI agent identities?

Ido: Usually the first sign is uncertainty. If you ask, “which AI agents do you have, what do they do and who owns each and every one of them?” and no one is confident in the answer, that’s telling.

You also start seeing identities that were created for a very specific purpose but never retired, or AI agents that were spun up for an experiment and quietly kept running. Over time these identities accumulate more permissions, or they simply fall off the radar.
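As a rough illustration of the inventory question Shlomo describes, the Python sketch below flags agent identities that have no recorded owner or have gone unused for a while. The `AgentIdentity` record, its fields, and the 30-day staleness threshold are hypothetical assumptions for illustration, not Token Security's data model or product logic.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class AgentIdentity:
    """Hypothetical inventory record for one AI agent identity."""
    name: str
    owner: Optional[str]  # None means nobody has claimed the identity
    purpose: str
    last_used: datetime
    permissions: set = field(default_factory=set)


def find_unmanaged(identities, now, stale_after=timedelta(days=30)):
    """Return (name, reasons) pairs for identities that look orphaned or stale."""
    findings = []
    for ident in identities:
        reasons = []
        if not ident.owner:
            reasons.append("no owner")
        if now - ident.last_used > stale_after:
            reasons.append(f"unused for more than {stale_after.days} days")
        if reasons:
            findings.append((ident.name, reasons))
    return findings


now = datetime(2025, 1, 31, tzinfo=timezone.utc)
inventory = [
    AgentIdentity("support-bot", "team-cx", "answer tickets", now - timedelta(days=1)),
    AgentIdentity("poc-summarizer", None, "one-off experiment", now - timedelta(days=90)),
]
for name, reasons in find_unmanaged(inventory, now):
    print(name, reasons)  # poc-summarizer ['no owner', 'unused for more than 30 days']
```

The experiment that was "quietly kept running" is exactly the kind of identity this check surfaces: no owner and no recent activity.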

And then there’s behavior. When an identity’s activity doesn’t match what the AI agent was created to do, or when the agent starts using permissions it has never used before, that’s a clear signal something isn’t aligned.

Vishwa: How can teams maintain engineering velocity while still enforcing solid governance over AI agent identities?

Ido: The key is making the secure path the default paved road. Builders don’t want to bypass security; they just want to move quickly. If creating or updating access for an agent requires a long approval process, people will naturally look for shortcuts.

When you provide clear patterns, standardized processes, and built-in guardrails in the tools they already work with, the friction goes away. Developers can keep their pace, and security gets the consistency and visibility it needs.


Vishwa: How important is automation in detecting and stopping issues with AI agent identities?

Ido: Automation is critical simply because of the number of agents deployed and the pace at which they work. AI agent identities are growing far more rapidly than human identities, and they operate continuously and autonomously. It’s not realistic to rely on manual reviews and blockers to catch problems.

Automation helps cut through the noise and surface behavior that doesn’t match expectations: an agent calling new APIs, accessing sensitive systems, or performing actions at unusual times.

And when something looks off, automation can take immediate action, or at least alert the right people with the right context. That speed makes a big difference in preventing incidents.
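The kind of deviation detection described in this answer can be sketched in a few lines of Python: learn which APIs and hours each agent normally uses, then flag events that fall outside that baseline or touch systems labeled sensitive. The `Event` type, the `SENSITIVE_SYSTEMS` labels, and the exact-match hour check are simplifying assumptions; a production detector would more likely use statistical baselines and route findings into an alerting or automated-response pipeline.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    agent: str
    api: str
    system: str
    hour: int  # hour of day, 0-23, in UTC


SENSITIVE_SYSTEMS = {"payroll-db", "secrets-vault"}  # hypothetical labels


def build_baseline(history):
    """Map each agent to the APIs and hours observed during a learning window."""
    baseline = {}
    for e in history:
        apis, hours = baseline.setdefault(e.agent, (set(), set()))
        apis.add(e.api)
        hours.add(e.hour)
    return baseline


def flag(event, baseline):
    """Return the reasons an event deviates from its agent's baseline (empty if none)."""
    apis, hours = baseline.get(event.agent, (set(), set()))
    reasons = []
    if event.api not in apis:
        reasons.append("new API")
    if event.system in SENSITIVE_SYSTEMS:
        reasons.append("sensitive system")
    if event.hour not in hours:
        reasons.append("unusual hour")
    return reasons


# A support agent that only ever read tickets during business hours.
history = [Event("support-bot", "tickets.read", "helpdesk", h) for h in range(9, 18)]
baseline = build_baseline(history)

print(flag(Event("support-bot", "tickets.read", "helpdesk", 10), baseline))   # []
print(flag(Event("support-bot", "users.export", "payroll-db", 3), baseline))  # ['new API', 'sensitive system', 'unusual hour']
```

Returning a list of reasons, rather than a bare yes/no, is one way to give responders the "right context" when an alert fires.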

Vishwa: How could attackers take advantage of AI agent identities once they gain access to one?

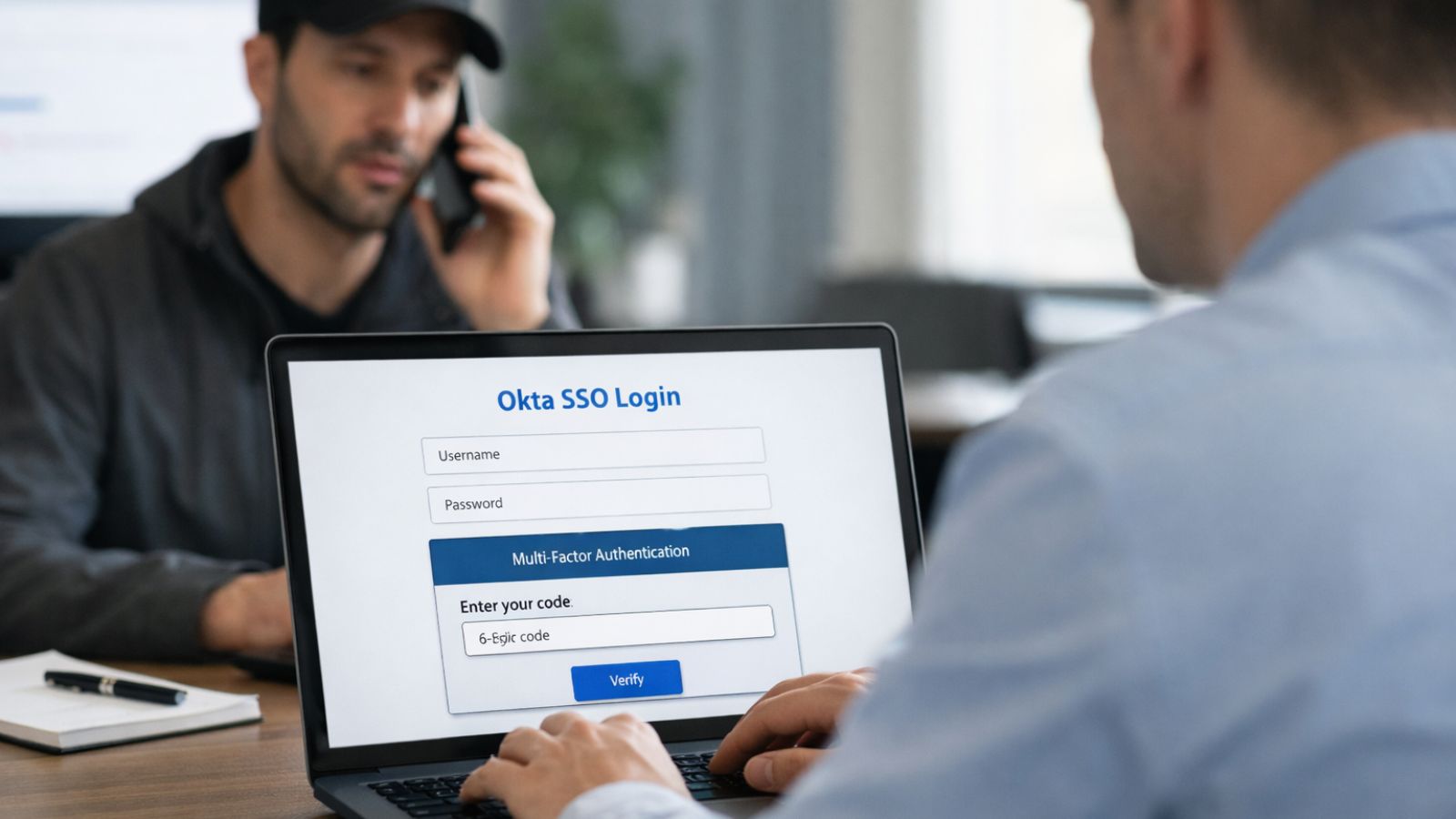

Ido: It often starts with an identity that isn’t being closely managed: maybe a service account created long ago with a long-lived credential, or an AI agent integrated with corporate systems using broader permissions than it needs.

Once an attacker gains the ability to prompt the agent and jailbreak its guardrails, they can explore what it can do and what it has access to. If the identity the agent uses has broad access, the attacker can move through systems in a way that looks legitimate.

They might read data, modify configurations, or create new identities that give them persistence and lateral movement. Because everything appears to come from a trusted identity, it can be hard to spot without good visibility into behavior.
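One way to spot the over-permissioned, long-lived identities described in this answer is to compare what an identity has been granted with what it has actually used, and to check how old its credential is. The sketch below assumes you can export both permission sets and a credential creation time; the AWS-style permission names and the 90-day maximum age are illustrative assumptions only.

```python
from datetime import datetime, timedelta, timezone


def excess_permissions(granted, used):
    """Permissions granted to an identity but never exercised in the observation window."""
    return sorted(set(granted) - set(used))


def credential_too_old(created_at, now, max_age=timedelta(days=90)):
    """True if a credential has outlived the (assumed) maximum rotation age."""
    return now - created_at > max_age


# Illustrative data for a hypothetical agent service account.
granted = {"s3:GetObject", "s3:PutObject", "iam:CreateUser"}
used = {"s3:GetObject"}
now = datetime(2025, 6, 1, tzinfo=timezone.utc)
created = datetime(2023, 1, 15, tzinfo=timezone.utc)

print(excess_permissions(granted, used))  # ['iam:CreateUser', 's3:PutObject']
print(credential_too_old(created, now))   # True
```

An unused permission such as `iam:CreateUser` is precisely the kind of access an attacker could use to create new identities for persistence, which makes it a natural first candidate for removal.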

Vishwa: Looking ahead, how do you think organizations will evolve in how they manage AI agent identities?

Ido: I think we’ll see a shift toward shorter-lived, more contextual identities. Identities will be created for specific tasks, representing a specific agentic role or intent, and then retired automatically once the agent is decommissioned or goes stale. This will reduce risk and make it easier to reason about what’s happening in the environment.
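A minimal sketch of the short-lived, task-specific identities Shlomo anticipates might look like the following: a hypothetical `IdentityBroker` issues a credential scoped to one task with a fixed time-to-live, and a sweep retires anything expired, while explicit retirement covers decommissioned agents. The class names, the 15-minute TTL, and the scope strings are assumptions for illustration, not a description of any specific product.

```python
import secrets
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone


@dataclass
class TaskIdentity:
    """A hypothetical identity scoped to one task, with a hard expiry."""
    task: str
    scopes: frozenset
    expires_at: datetime
    credential: str = field(default_factory=lambda: secrets.token_urlsafe(16))
    retired: bool = False

    def is_valid(self, now):
        return not self.retired and now < self.expires_at


class IdentityBroker:
    """Hypothetical broker that issues task-scoped identities and retires them automatically."""

    def __init__(self, ttl=timedelta(minutes=15)):
        self.ttl = ttl
        self.active = {}  # credential -> TaskIdentity

    def issue(self, task, scopes, now):
        ident = TaskIdentity(task, frozenset(scopes), now + self.ttl)
        self.active[ident.credential] = ident
        return ident

    def retire(self, credential):
        """Retire an identity explicitly, e.g. when its agent is decommissioned."""
        ident = self.active.pop(credential, None)
        if ident is not None:
            ident.retired = True

    def retire_expired(self, now):
        """Sweep out identities whose TTL has passed; returns how many were retired."""
        expired = [c for c, i in self.active.items() if not i.is_valid(now)]
        for c in expired:
            self.retire(c)
        return len(expired)


now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
broker = IdentityBroker()
ident = broker.issue("summarize-q4-report", {"docs:read"}, now)
print(ident.is_valid(now))                                  # True
print(broker.retire_expired(now + timedelta(minutes=20)))   # 1
print(ident.is_valid(now + timedelta(minutes=20)))          # False
```

Because expiry is built into the identity itself, forgetting to clean up fails safe: a stale credential simply stops working.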

AI agents will also need more granular, intent-based permission models. Rather than assigning a broad role to an agent, access should be tied to what it needs in the moment, to the consumer of the agent, and to the original intent the agent was created for, with the ability to adjust as the agent’s function evolves.
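To make the intent-based idea concrete, here is one possible sketch, under the assumption that each agent is registered with the intent it was created for and that the caller's own entitlements are known. An action is allowed only if it falls inside both. The registries, agent names, and action strings are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    agent: str
    consumer: str  # the user or service invoking the agent
    action: str    # e.g. "crm:read"


# Hypothetical registry: each agent is bound to the intent it was created for.
AGENT_INTENTS = {
    "sales-assistant": {"intent": "answer account questions", "allowed": {"crm:read"}},
}

# Hypothetical entitlements of the people and services that call agents.
CONSUMER_ENTITLEMENTS = {
    "alice": {"crm:read", "crm:write"},
    "bob": set(),
}


def authorize(req):
    """Allow an action only if it fits the agent's intent AND the consumer's own rights."""
    agent = AGENT_INTENTS.get(req.agent)
    if agent is None:
        return False  # unregistered agents get nothing by default
    consumer_rights = CONSUMER_ENTITLEMENTS.get(req.consumer, set())
    return req.action in agent["allowed"] and req.action in consumer_rights


print(authorize(Request("sales-assistant", "alice", "crm:read")))   # True
print(authorize(Request("sales-assistant", "alice", "crm:write")))  # False: outside the agent's intent
print(authorize(Request("sales-assistant", "bob", "crm:read")))     # False: consumer lacks the right
```

Treating the effective permission as an intersection means an agent cannot be used to reach data its consumer could not reach directly, and evolving the agent's function becomes a matter of editing its `allowed` set rather than granting a broader role.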

Overall, I expect AI agent identity management to become a core part of security programs as organizations recognize just how much of their infrastructure relies on these identities.