PromptLock: First Known AI-Powered Ransomware Uses Ollama API for Dynamic Attacks

- AI ransomware: A malware variant named PromptLock is the first known instance of AI-powered ransomware.

- Malware details: It utilizes an OpenAI model via the Ollama API to target both Windows and Linux systems.

- Other instances: This year, the now-silent FunkSec ransomware group also reportedly leaned on AI to develop its malicious code.

ESET researchers have identified a malware variant named PromptLock as the first known instance of AI-powered ransomware. While the identified samples are considered a proof of concept (PoC), the implications for enterprise security are substantial.

This ESET discovery marks a significant development in the evolution of cyber threats, demonstrating the practical application of artificial intelligence models to automate and enhance malicious operations.

PromptLock Malware: AI-Driven Attack Automation

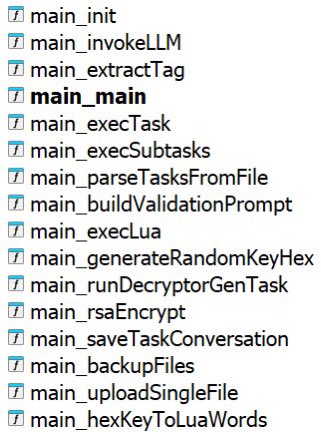

The PromptLock malware, written in Golang with variants for both Windows and Linux, uses an artificial intelligence (AI) model accessed locally through the Ollama API to dynamically generate malicious scripts. According to ESET Research, that model is gpt-oss:20b from OpenAI.

PromptLock does not download the entire model. “The attacker can simply establish a proxy or tunnel from the compromised network to a server running the Ollama API with the gpt-oss-20b model,” ESET said.
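To show why the model does not need to live on the client, here is a minimal, benign sketch of what a request to an Ollama server looks like. It is not PromptLock's code. It assumes Ollama's documented `/api/generate` endpoint and its default port 11434; the URL, the prompt, and the helper name `build_generate_request` are illustrative assumptions. The request is only built and printed, never sent.

```python
import json
import urllib.request

# Assumption: an Ollama server reachable at its default port (11434).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt, model="gpt-oss:20b", url=OLLAMA_URL):
    """Build (but do not send) a POST request for Ollama's generate endpoint."""
    body = json.dumps(
        {"model": model, "prompt": prompt, "stream": False}
    ).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Harmless example prompt; the client only needs network access to the server.
req = build_generate_request("Summarize what a Lua table is in one sentence.")
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
```

Because the client only sends a small JSON body over HTTP, any host that can reach the server, directly or through a proxy or tunnel, can use the model without storing it.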

This allows the malware to generate and execute Lua scripts on the fly, based on hard-coded prompts. These AI-generated scripts enable the ransomware to perform key functions, including local filesystem enumeration, inspection of target files, data exfiltration, and file encryption – possibly even data destruction.

This capability for autonomous, on-the-fly script generation represents a new level of sophistication in ransomware attacks, potentially allowing for more adaptive and evasive behavior within a compromised environment.
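For defenders, one possible starting point is to look for unexpected Ollama API traffic in proxy or firewall logs. The sketch below is a rough heuristic, not an ESET-provided detection. It assumes Ollama's default port (11434) and two of its documented endpoint paths; the log format, sample lines, and the function name `flag_ollama_traffic` are hypothetical.

```python
import re

# Assumptions: Ollama's default port and two of its documented API paths.
OLLAMA_PORT = 11434
OLLAMA_PATHS = ("/api/generate", "/api/chat")

# Matches ":11434" only when it is not followed by another digit.
_PORT_RE = re.compile(rf":{OLLAMA_PORT}(?!\d)")


def flag_ollama_traffic(log_lines):
    """Return (line_number, line) pairs that look like Ollama API requests."""
    hits = []
    for number, line in enumerate(log_lines, start=1):
        has_port = _PORT_RE.search(line) is not None
        has_path = any(path in line for path in OLLAMA_PATHS)
        if has_port or has_path:
            hits.append((number, line))
    return hits


# Hypothetical proxy log lines, for illustration only.
sample_log = [
    "10.0.0.5 GET http://intranet.example/index.html 200",
    "10.0.0.9 POST http://203.0.113.7:11434/api/generate 200",
    "10.0.0.9 POST http://files.example:8080/upload 201",
]

for number, line in flag_ollama_traffic(sample_log):
    print(number, line)
```

A match is only a lead: legitimate developer use of Ollama would trigger it too, and an attacker can change the port or tunnel the traffic, so a check like this would need to be combined with other signals.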

The Role of AI in Cyberattacks

The emergence of PromptLock validates concerns about the malicious use of AI in cyberattacks. Publicly available AI tools lower the barrier to entry for less-skilled threat actors and provide sophisticated adversaries with powerful tools for automation.

The ability of AI-powered malware to adapt its tactics based on the target environment could dramatically increase the speed, scale, and impact of future attacks. This development underscores the critical need for defense mechanisms that can counter dynamic, AI-driven threats. ESET has published a white paper addressing the risks and opportunities of AI for cyber defenders.

This year, TechNadu reported that the ransomware group FunkSec appeared to use AI assistance in developing its malware. The gang has since fallen silent, and a decryptor was released at the end of July.

In April, the AI-powered bot AkiraBot was seen bypassing CAPTCHA checks and targeting websites with spam at scale. In July, the AI-powered cloaking services ‘Hoax Tech’ and ‘JS Click Cloaker’ were observed in phishing campaigns.