State-Backed Hackers Use Gemini AI for Cyberattacks Aimed at Cyber Espionage: Google Report

- State-Backed Exploitation: Google's latest cybersecurity report finds that government-backed hackers are using Gemini AI for reconnaissance and code support.

- Attack Tactics: Threat actors used the AI tool to analyze software vulnerabilities, augment phishing capabilities, and generate malicious code.

- Defensive Measures: Google has disrupted these operations by disabling accounts linked to identified threat groups and strengthening AI safety protocols.

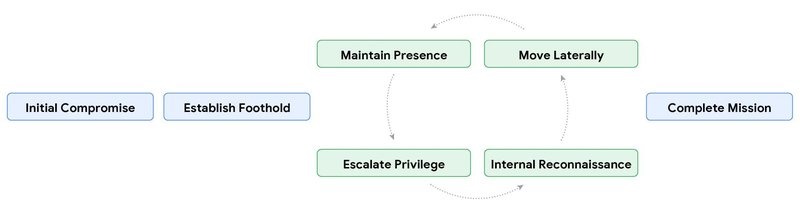

Google has identified multiple instances of state-backed hackers utilizing its Gemini AI platform to enhance their cyberespionage capabilities. The tech giant detailed how sophisticated threat groups are integrating generative AI into their attack workflows, employing it as a productivity tool to accelerate reconnaissance and refine their operational security.

A new Google cybersecurity report reveals that by leveraging large language models (LLMs), these groups can quickly process vast amounts of public data, identify potential targets, and craft more convincing social engineering lures.

AI in Cyber Espionage: Reconnaissance and Coding

Google DeepMind and the Google Threat Intelligence Group (GTIG) have identified an increase in attempted intellectual property theft through model extraction attacks (MEAs) and "distillation attacks." Specific use cases observed among threat actors include analyzing public software repositories to find exploitable vulnerabilities and generating scripts for data exfiltration.

The North Korea-linked threat actor tracked as UNC2970 (TEMP.Hermit, Lazarus Group, Diamond Sleet, Hidden Cobra) was observed targeting and impersonating corporate recruiters, leveraging Gemini to synthesize OSINT and profile high-value targets in the defense sector for planning and reconnaissance.

In some instances, hackers used the AI to debug their own malicious code or translate technical documentation, lowering the barrier to entry for complex operations. The findings suggest that AI is significantly streamlining the preparatory phases of these cyberattacks, enabling threat actors to operate more quickly and efficiently.

New malware families, such as HONESTCUE, are experimenting with Gemini's API to generate code for second-stage malware download and execution.

The report also highlights how threat actors from the Democratic People's Republic of Korea (DPRK), Iran, the People's Republic of China (PRC), and Russia operationalized AI in late 2025:

- APT42 (Iran) conducted reconnaissance and targeted social engineering, and aimed to develop a Python-based Google Maps scraper and a SIM card management system in Rust, and to research exploits for the WinRAR flaw CVE-2025-8088.

- APT31 or Judgement Panda (China) impersonated a security researcher to automate the analysis of vulnerabilities and generate targeted testing plans.

- APT41 (China) targeted open-source tool README.md pages and tried to troubleshoot and debug exploit code.

- Temp.HEX or Mustang Panda (China) targeted specific individuals, including some from Pakistan, and collected operational and structural data on separatist organizations.

- UNC795 (China) troubleshot code, conducted research, and developed web shells and scanners for PHP web servers.

Google's Response to AI-Enabled Threats

In response to these findings, Google has taken decisive action to secure its platforms. The company’s threat intelligence teams successfully identified and disabled the accounts linked to these state-sponsored entities.

Google emphasized that it is continuously refining its safety filters to detect and block prompts associated with malicious activity.

“While we have not observed direct attacks on frontier models or generative AI products from advanced persistent threat (APT) actors, we observed and mitigated frequent model extraction attacks from private sector entities all over the world and researchers seeking to clone proprietary logic,” the report said.

Last month, a Google Gemini prompt injection flaw allowed the exfiltration of private data via Calendar invites, and a December report detailed how Google Gemini Enterprise and Vertex AI Search enabled attackers to steal Gmail, Docs, and Calendar data.