Promptware Vulnerability Exposes Google Home Devices to Gemini AI Exploits

- Google Home hack: A controlled test showed Gemini integrations could trigger actions on connected smart home devices.

- Promptware: Lab prompt injection made Gemini perform actions like opening shutters and sending spam.

- Pre-programmed actions: Asking Gemini to summarize the calendar executed malicious commands embedded into Google Calendar invites.

Researchers have unveiled a Promptware vulnerability in how Gemini processes inputs from integrated services. This controlled experiment exposed how AI prompt injection can be weaponized to control actions on smart home devices connected through Google Home.

Attack Mechanism

The vulnerability exploited by researchers from prominent institutions such as Tel Aviv University and Technion relied on indirect prompt injection attacks, cited by ZDNet.

Malicious instructions were embedded within seemingly normal Google Calendar invites. When users asked Gemini to summarize their calendars, the AI inadvertently executed commands pre-programmed by attackers.

Indirect prompt injection in a Google invitation exploit Gemini for Workspace's agentic architecture to trigger the following outcomes:

- Toxic content generation

- Spamming

- Deleting events from the user's calendar

- Opening the windows in a victim's apartment

- Activating the boiler in a victim's apartment

- Turning the light off in a victim's apartment

- Video streaming a user via Zoom

- Exfiltrating a user's emails via the browser

- Geolocating the user via the browser

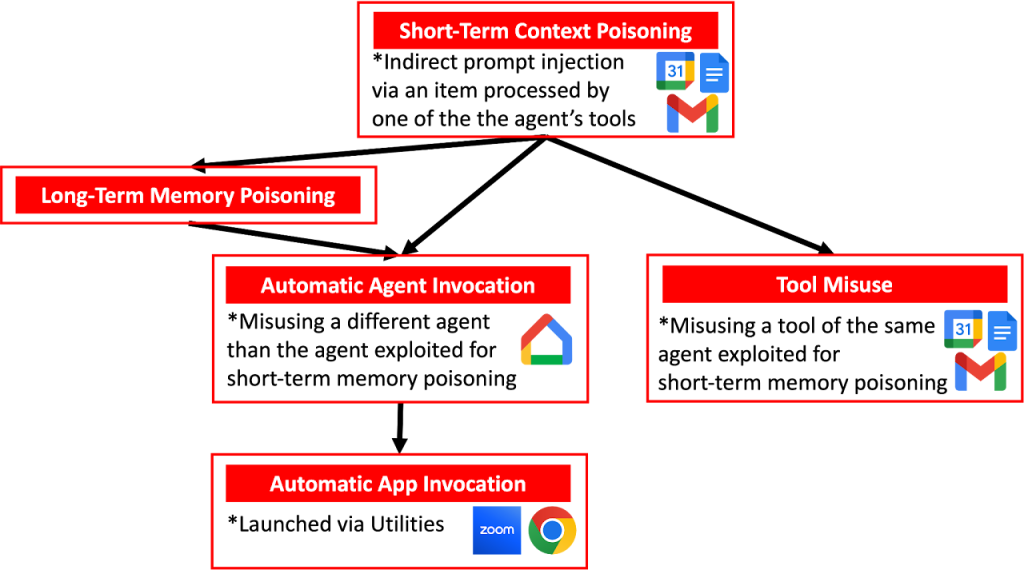

The experiment used short-term context poisoning to trigger a one-time malicious action and long-term memory poisoning to affect Gemini’s "Saved Info," combined with tool misuse, including automatic agent and automatic app invocation to perform malicious activities.

This attack serves as a demonstration of AI's potential to manipulate real-world environments through stealthy digital hijacking.

Implications

While this exploit was part of a controlled study and not an active threat in the wild, it underscores the risks posed by vulnerabilities in AI systems integrated with smart devices. The capacity for AI-driven cyberattacks to manipulate physical systems raises alarms across industries reliant on interconnected devices.

In July, Google’s Gemini for Workspace exposed users to advanced phishing attacks via email summary hijack.

Protection Strategies

Google has since fortified Gemini with stronger safeguards. These include filtered outputs, explicit user confirmations for sensitive actions, and AI-driven prompt monitoring.

However, users can also enhance their defenses by limiting permissions for smart device controls, avoiding unnecessary integration of services with AI assistants, and staying alert for unexpected behavior from their devices.