New AI-Native Threat: Vulnerability in Google Gemini Enterprise and Vertex AI Search Allowed Stealing Gmail, Docs, and Calendar Data

Key Takeaways

- Critical flaw: A zero-click flaw in Google Gemini Enterprise and Vertex AI Search allowed stealing sensitive corporate data from Gmail, Docs, and Calendar.

- Zero-click breach: Attackers exploit the vulnerability using indirect prompt injection without any user interaction beyond a normal search.

- Google deploys fix: Google has deployed updates to mitigate the architectural weakness in its AI systems.

A significant Google AI security flaw has been discovered by security firm Noma Labs, highlighting a new category of AI-native cybersecurity threats. The vulnerability, named GeminiJack, affected Google Gemini Enterprise and Vertex AI Search, allowing attackers to access and exfiltrate corporate data through a novel, zero-click attack vector.

An attacker could embed malicious instructions in a shared Google Docs, email, or calendar invite, which the AI would later execute during a routine employee search.

The Mechanics of the GeminiJack Vulnerability

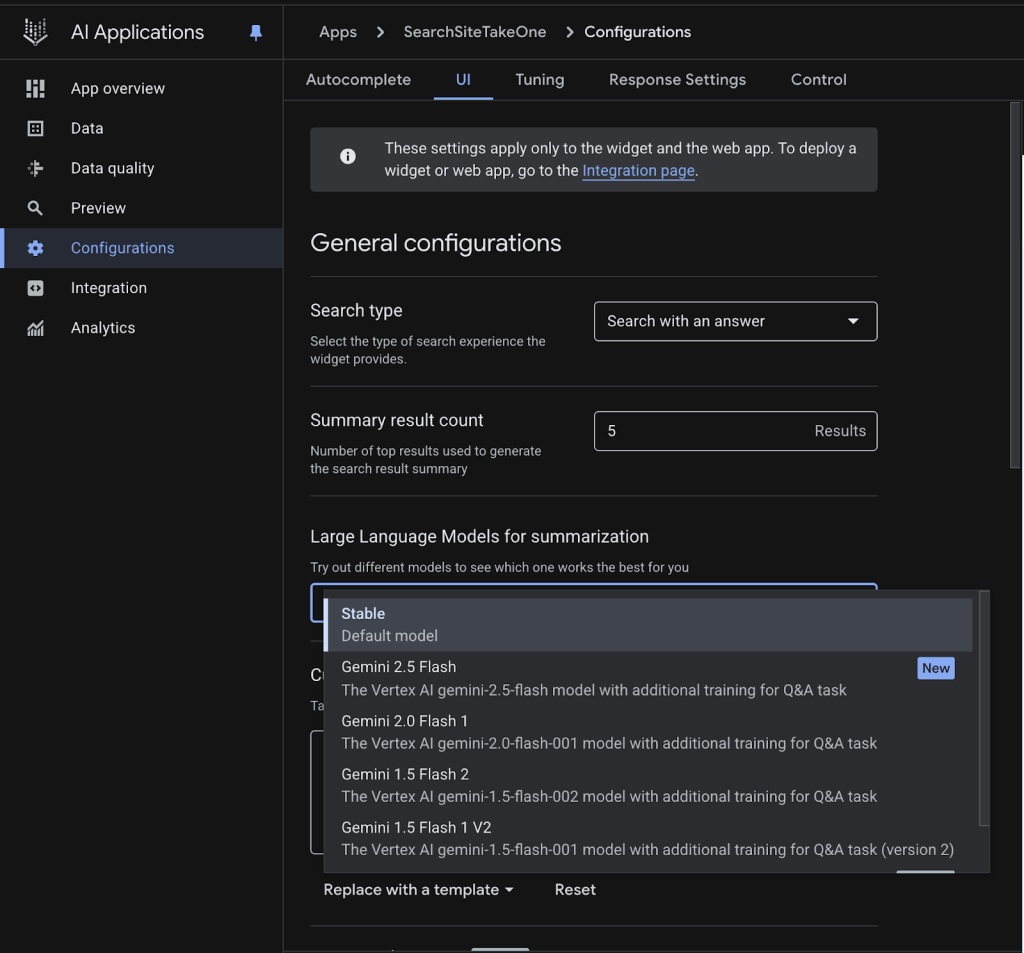

The Noma Security report says the flaw was not a conventional bug but an architectural weakness in how the AI's Retrieval-Augmented Generation (RAG) system processed information, making it susceptible to indirect prompt injection.

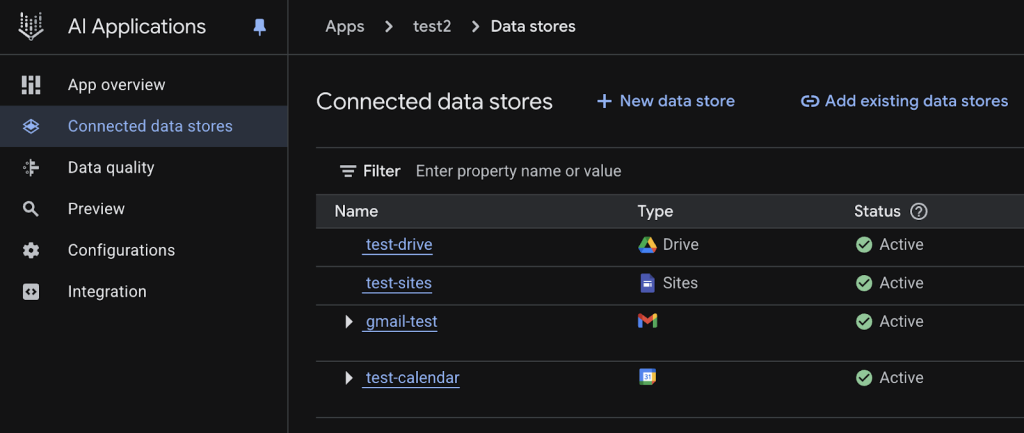

Attackers could poison a document with hidden commands instructing the AI to search for sensitive terms such as "budget," "finance," or "acquisition," and then load the results into an external image URL under the attacker's control.

When an employee performed a relevant search, the AI would retrieve the malicious document, interpret the hidden instructions as legitimate commands, and search across all connected Workspace data sources—including Gmail, Calendar, and Docs. The GeminiJack attack enabled a silent, zero-click data breach, as Gemini treated the embedded instruction as a legitimate command.

The process would appear as normal traffic to traditional security tools. This meant confidential data could be stolen without triggering any alarms.

“Google didn't filter HTML output, which means an embedded image tag triggered a remote call to the attacker's server when loading the image,” said Sasi Levi, Security Research Lead at Noma Security.

“The URL contains the exfiltrated internal data discovered during searches. Maximum payload size wasn't verified; however, we were able to successfully exfiltrate lengthy emails.”

Coordinated Disclosure and Mitigation

Following responsible disclosure practices, Noma Labs reported the vulnerability to Google on May 6, 2025. Google's security team acknowledged the issue and worked with the researchers to implement a fix, which was deployed after a thorough investigation.

Recommendations to organizations:

- Based on risk tolerance, consider limiting or blocking access (for example, reducing the scope of exposed data or enforcing outbound allowlists)

- Review which data sources are connected – both native Google data sources and external integrations may be included.

- In general, agents should be granted access to data and tools only when needed, for a limited duration, based on clear business justification, and with full auditing.

The mitigation addressed the core issue of how the RAG system differentiates between content and instructions. While this specific issue is resolved, GeminiJack serves as a critical warning about the evolving security landscape as AI becomes more deeply integrated with sensitive enterprise data.

In a similar case discovered a few months ago, a vulnerability in Salesforce Agentforce dubbed ForcedLeak exposed CRM data through indirect AI prompt injection.