Meta Disrupts Major Influence Operations Targeting Romania, Azerbaijan, Taiwan via Facebook, TikTok

- Meta discovered covert influence campaigns on its own services, with the Romanian operation also extending to TikTok, X, and YouTube.

- Fake personas posed as real citizens to slip manipulative content into their posts, including content about the Romanian elections.

- Other networks posted about the Myanmar junta, the Japanese government, and allegedly corrupt Taiwanese officials.

Meta has announced the disruption of covert influence campaigns targeting Romania, Azerbaijan, and Taiwan. These operations leveraged fake personas across its platforms in an effort to manipulate public discourse and interfere in local affairs, including elections.

Meta’s latest transparency advisory details coordinated inauthentic behavior (CIB): campaigns whose operators built networks of inauthentic accounts to spread disinformation and sow distrust around upcoming elections and sensitive geopolitical issues.

These operations spanned Meta-owned platforms and other social networks, relying on elaborate fake personas and AI-generated content to shape the conversation.

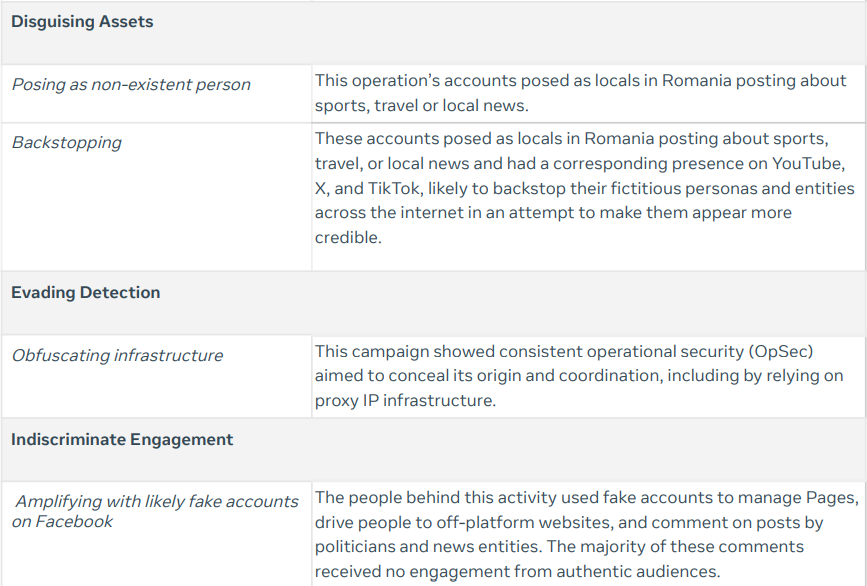

The Romanian campaign proved the most prolific, involving a network of 658 Facebook accounts, 14 Pages, and two Instagram profiles. The threat actors posed as Romanian residents and posted local news, sports, and travel content on Meta’s platforms as well as on TikTok, X (formerly Twitter), and YouTube.

Their objective was to drive engagement with politically oriented content and steer users to external sites. Despite the actors’ operational security measures, including the use of proxy IP addresses, Meta detected the network and intervened before most of these accounts could build significant authentic audiences. One Page in the campaign nonetheless amassed approximately 18,300 followers.

A second operation, resembling the activity of the Iran-based group known as Storm-2035, targeted Azeri-speaking audiences in Azerbaijan and Turkey using 17 Facebook accounts, 22 Pages, and 21 Instagram accounts. These assets impersonated female journalists and pro-Palestinian activists and amplified content with hashtags such as #palestine and #gaza.

The accounts posted on topics ranging from the Paris Olympics to geopolitical controversies involving Israel and the U.S., and used spam tactics to break into trending conversations. This operation is consistent with earlier Storm-2035 activity identified by Microsoft, which included attempts to polarize U.S. voter groups on divisive topics.

The third operation, which originated in China, was multifaceted, comprising three distinct clusters targeting Myanmar, Taiwan, and Japan. Meta removed 157 Facebook accounts, 19 Pages, one Group, and 17 Instagram profiles linked to the activity.

The operators used AI-generated photos to build convincing fake profiles and maintained an “account farm” to continuously replace removed assets. The campaigns posted in multiple languages on political and military affairs, supporting the Myanmar junta, criticizing Japanese government policies, and alleging corruption among Taiwanese officials.

These findings underscore the persistent threat posed by covert actors, including state-linked ones, who exploit social platforms to influence narratives, and they show both the growing sophistication and the global scale of adversarial online operations.