Insider Risk Report Reveals Widespread Data Loss, Insider Incidents Plague Organizations

- Pervasive incidents: More than three-quarters of organizations experienced insider-driven data loss within the last 18 months, and most incidents were unintentional.

- Significant impact: About four in ten organizations reported financial losses of $1 million to $10 million from their most significant insider incident.

- Critical visibility gaps: Almost three-quarters of organizations lack visibility into how users interact with sensitive data across endpoints, cloud, and GenAI platforms.

Insider threats are a prevalent and costly issue for enterprises, with a new report finding that 77% of organizations suffered at least one insider-driven data loss incident in the past 18 months. Of these incidents, 62% were attributed to negligent or compromised users rather than malicious intent.

Key Data Security Challenges Identified

The 2025 Insider Risk Report, a study by Fortinet and Cybersecurity Insiders, surveyed 883 IT and security professionals and found that financial consequences are substantial, with 41% of respondents reporting losses between $1 million and $10 million for their most significant event.

A primary challenge highlighted in the report is the lack of visibility. A striking 72% of organizations cannot adequately monitor how users interact with sensitive data across various platforms, including endpoints and cloud applications.

This visibility gap is exacerbated by the rapid adoption of generative AI, as 56% of security leaders express concern about sharing data with tools like ChatGPT, yet only 12% feel prepared to respond.

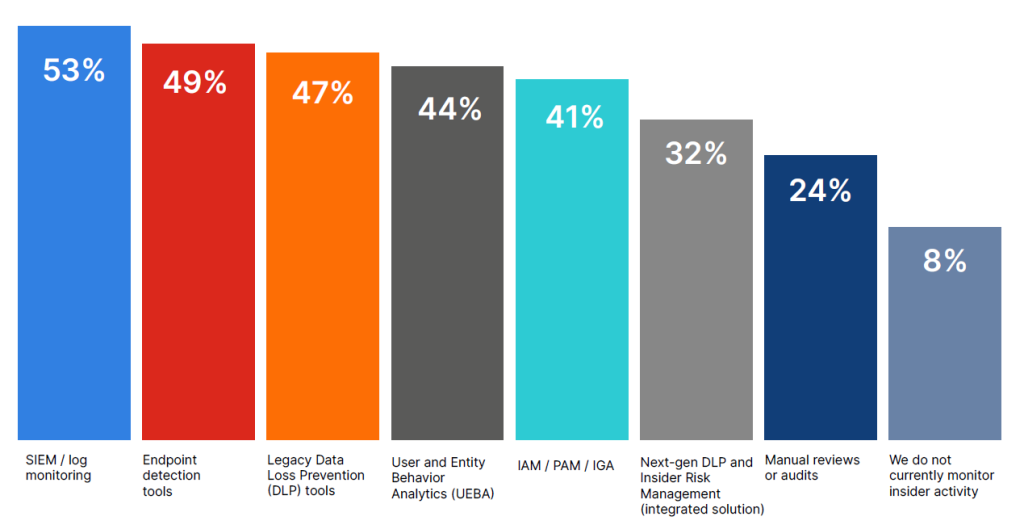

The report also notes that traditional Data Loss Prevention (DLP) tools are falling short: only 47% of users find them effective, which creates significant data security challenges.

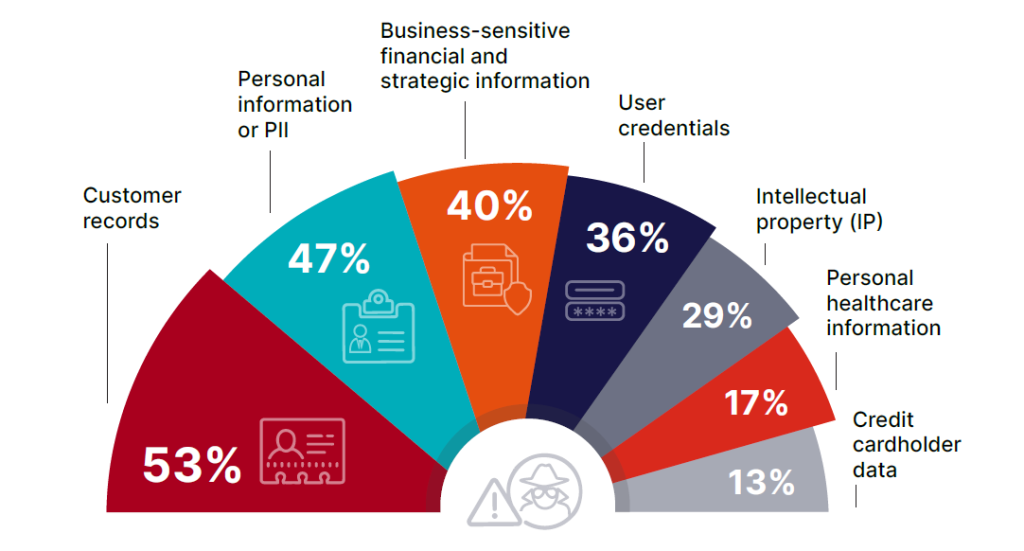

Types of sensitive data that are most often at risk include:

- customer records (53%)

- personally identifiable information (47%)

- business-sensitive plans (40%)

- user credentials (36%)

- intellectual property (29%)

“I have often seen company employees enter business secrets into large language models - without knowing that this data could possibly be output by another user,” Prof. Dr. Dennis Kenji Kipker, the Research Director at “cyberintelligence.institute” and Board Advisor of Nord Security, told TechNadu.

Jason Soroko, Senior Fellow at Sectigo, emphasized that employees, contractors, or partners may exploit vulnerabilities stemming from complex IT environments, hybrid work models, or the adoption of advanced tools like GenAI, either intentionally or unintentionally.

Shift Towards Behavioral Analytics and Modern Solutions

In response to these challenges, the report indicates a strategic shift in how organizations approach insider risk. There is a growing demand for next-generation platforms that integrate data protection with insider risk management.

Sixty-six percent of security leaders are now prioritizing real-time behavioral analytics to interpret user intent and detect deviations from normal activity before a breach occurs.
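As a rough illustration of what "detecting deviations from normal activity" can mean in practice, the sketch below compares one user's activity for a day against that same user's own history. It is a minimal example under stated assumptions, not a method taken from the report: the metric (daily upload volume in megabytes), the function names `deviation_score` and `is_anomalous`, the sample numbers, and the threshold of three standard deviations are all hypothetical.

```python
from statistics import mean, stdev


def deviation_score(history, today):
    """Return how many standard deviations `today` sits above the user's own baseline."""
    if len(history) < 2:
        return 0.0  # not enough data to form a baseline
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # A perfectly flat history: any change at all is a deviation
        return 0.0 if today == mu else float("inf")
    return (today - mu) / sigma


def is_anomalous(history, today, threshold=3.0):
    """Flag activity that exceeds the baseline by more than `threshold` deviations."""
    return deviation_score(history, today) > threshold


# Hypothetical daily upload volumes (MB) for a single user
baseline_mb = [40, 55, 48, 52, 45, 50, 60]

print(is_anomalous(baseline_mb, 58))   # False: within the user's normal range
print(is_anomalous(baseline_mb, 900))  # True: far outside the baseline
```

Production tools would likely track many signals per user and peer group and update baselines continuously; a single per-user threshold like this one mainly shows the underlying idea.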

Furthermore, 52% cite control over shadow IT and unsanctioned SaaS applications as a critical priority, signaling a move away from reactive enforcement toward more adaptive, context-aware security strategies.

Security Experts Advise

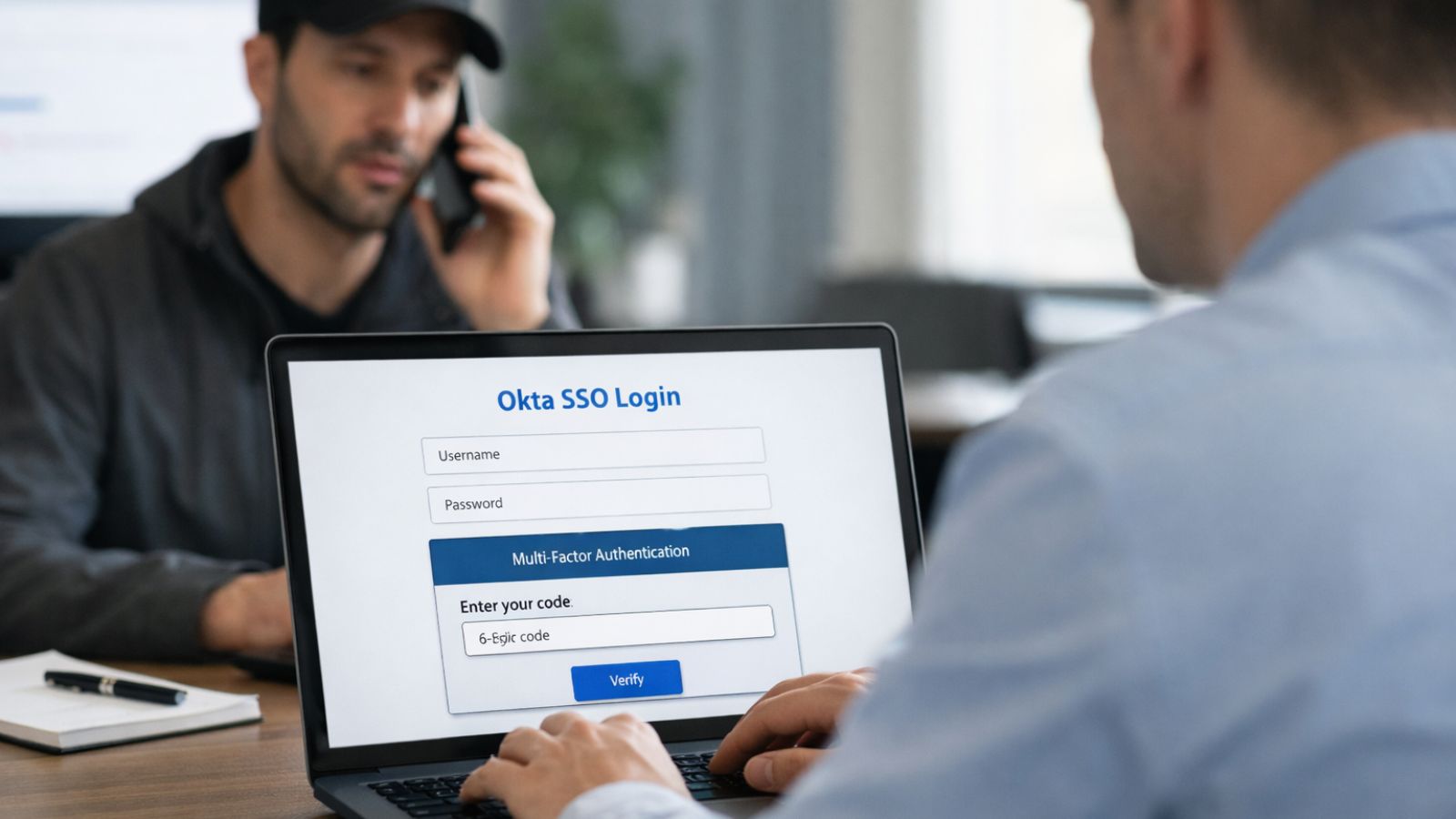

Matthieu Chan Tsin, Senior Vice President, Resiliency Services at Cowbell, said companies must adopt a comprehensive strategy that combines technological tools, strong internal policies, and continuous employee monitoring. Darren Guccione, CEO and Co-Founder at Keeper Security, emphasized implementing a zero-trust architecture with least-privilege access.

“Companies are not only required to raise awareness and train their employees, but also to develop and enforce clear AI guidelines,” Kipker told TechNadu in an interview.

Dr. Margaret Cunningham, Vice President of Security & AI Strategy at Darktrace, noted that employees forwarding files to personal accounts, bypassing controls to meet deadlines, or uploading sensitive data into unsanctioned AI tools “are normalized behaviors that, at scale, create significant organizational risk.”

AI can surface subtle deviations that humans and static controls would miss by continuously learning the “patterns of life” across individuals, teams, and systems, Cunningham says. It can detect changes in engagement, anomalous access behaviors, or unusual tool usage in real time, often long before these signals escalate into damage.

Chad Cragle, Chief Information Security Officer at Deepwatch, says insider risk is so dangerous because it doesn’t just bypass your defenses: “it operates from within them or, as the insider sees it, already behind enemy lines,” since the insider is part of the defense.

Cragle stated that effective detection involves correlating signals across systems, establishing behavioral baselines, and highlighting anomalies in context, such as spotting “that midnight data transfer connected to an unusual login location” or the use of an unapproved tool, then linking these clues quickly enough to intervene before damage occurs.

“The challenge is finding the right balance: staying vigilant without turning the workplace into a surveillance state.”
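The cross-system correlation Cragle describes can be sketched in a few lines. This is only an illustrative example under assumptions: the event format, the signal names, the one-hour window, and the `correlate` helper are all hypothetical and do not reflect any vendor's product.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# Hypothetical events emitted by different systems (identity, network, endpoint)
events = [
    {"user": "alice", "signal": "unusual_login_location", "time": datetime(2025, 1, 10, 23, 40)},
    {"user": "alice", "signal": "large_data_transfer", "time": datetime(2025, 1, 11, 0, 5)},
    {"user": "bob", "signal": "unapproved_tool", "time": datetime(2025, 1, 10, 14, 0)},
]


def correlate(events, window=timedelta(hours=1), min_signals=2):
    """Return users with at least `min_signals` distinct signals inside one time window."""
    by_user = defaultdict(list)
    for event in events:
        by_user[event["user"]].append(event)

    alerts = {}
    for user, user_events in by_user.items():
        user_events.sort(key=lambda e: e["time"])
        for i, first in enumerate(user_events):
            # Collect distinct signal types that occur within `window` of this event
            kinds = {
                e["signal"]
                for e in user_events[i:]
                if e["time"] - first["time"] <= window
            }
            if len(kinds) >= min_signals:
                alerts[user] = sorted(kinds)
                break
    return alerts


print(correlate(events))
# {'alice': ['large_data_transfer', 'unusual_login_location']}
```

Here a single signal, such as one use of an unapproved tool, does not raise an alert by itself; only the combination of signals for the same user in a short window does, which is one simple way to limit noise.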