Infostealer Evolves to Target AI Agents, OpenClaw Configurations

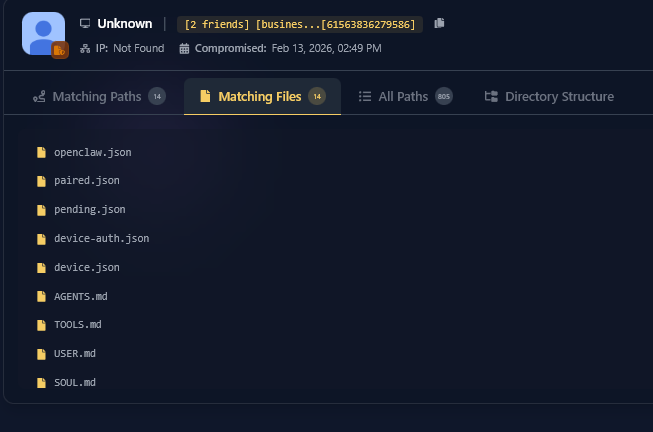

- Expanded Threat: Hudson Rock has identified a real-world incident in which an infostealer successfully exfiltrated a victim's entire OpenClaw configuration environment.

- Total Compromise: The stolen data includes authentication material, such as a gateway token and the device's private key, that could give the attacker full control over the AI agent.

- Contextual Theft: Beyond credentials, the malware harvested "soul" files and memory logs, effectively stealing the digital personality and daily context of the user's AI assistant.

An active infostealer captured OpenClaw configurations through a broad file-grabbing routine that sweeps for specific directory names, such as .openclaw. In doing so, it inadvertently swept up the full operational context of the user's personal AI agent.

This discovery marks a shift from traditional credential theft to the exfiltration of complex AI environments, highlighting growing AI agent security risks as these tools become deeply integrated into professional workflows.
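To see what a name-based sweep like this would collect on a given machine, the Python sketch below walks a directory tree, finds every directory whose name matches a target list, and lists the files inside it. It is a hypothetical audit aid, not the malware's code. The function names are illustrative, and the assumption that OpenClaw state sits in a directory named .openclaw rests only on the directory name cited in the report.

```python
import os
from pathlib import Path

# Assumption: OpenClaw keeps its state in a directory named ".openclaw",
# the name cited in the report. Extend this set for other agent tools.
TARGET_DIR_NAMES = {".openclaw"}


def find_agent_dirs(root: Path, names=TARGET_DIR_NAMES) -> list[Path]:
    """Walk `root` and return every directory whose name is in `names`,
    mirroring how a name-based file grabber locates its targets."""
    hits = []
    for dirpath, dirnames, _filenames in os.walk(root):
        for d in dirnames:
            if d in names:
                hits.append(Path(dirpath) / d)
    return hits


def exposed_files(agent_dir: Path) -> list[Path]:
    """List every regular file under an agent directory, sorted by path.
    A grabber that copies the whole directory would take all of these."""
    return sorted(p for p in agent_dir.rglob("*") if p.is_file())
```

For example, calling `find_agent_dirs(Path.home())` returns candidate directories, and `exposed_files()` on each one shows everything a whole-directory grab would copy. Walking an entire home directory can be slow on large disks.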

OpenClaw Configuration Theft Exposes Critical Keys

An analysis published on Infostealers by Hudson Rock revealed that the malware stole files essential to the agent's operation. The exfiltrated data included openclaw.json, which contained the victim's email address (redacted) and a high-entropy gateway token that could allow remote access.

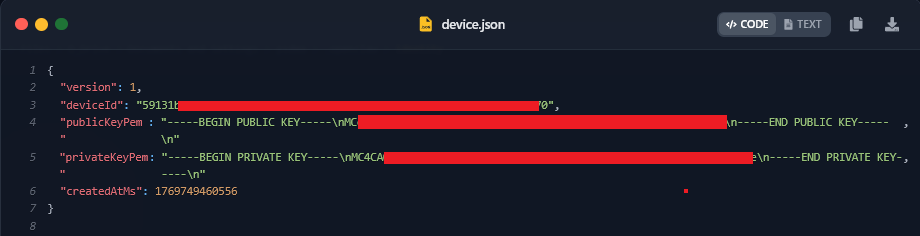

More critically, the stolen data included device.json, which exposes the keys the device uses for secure pairing and signing operations within the OpenClaw ecosystem, including its privateKeyPem.

With this key, an attacker could impersonate the victim's device, bypassing security checks to access encrypted logs and paired cloud services.
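The report names only a few sensitive fields: a gateway token in openclaw.json and privateKeyPem in device.json. It does not describe the rest of either file's layout. The Python sketch below assumes both files are JSON, as their extensions suggest. It flags keys whose names look secret-bearing and reports only their dotted paths, so the audit never prints key material. The marker list and function names are hypothetical heuristics, not part of OpenClaw.

```python
import json
from pathlib import Path

# Heuristic substrings for secret-bearing key names. Only "privateKeyPem"
# is confirmed by the report; the other markers are assumptions.
SECRET_MARKERS = ("privatekey", "token", "secret")


def secret_fields(obj, prefix=""):
    """Recursively yield dotted paths of keys whose names look secret.
    Values are never yielded, so the audit itself leaks nothing."""
    if isinstance(obj, dict):
        for key, value in obj.items():
            path = f"{prefix}.{key}" if prefix else key
            if any(m in key.lower() for m in SECRET_MARKERS):
                yield path
            yield from secret_fields(value, path)
    elif isinstance(obj, list):
        for i, item in enumerate(obj):
            yield from secret_fields(item, f"{prefix}[{i}]")


def audit_file(path: Path) -> list[str]:
    """Parse one JSON config file and return the paths of suspect keys."""
    with path.open(encoding="utf-8") as fh:
        return list(secret_fields(json.load(fh)))
```

File permissions alone do not stop an infostealer running under the victim's own account. An inventory like this is mainly useful for deciding which tokens and keys to rotate after a suspected infection.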

Cybersecurity for AI Tools and Future Implications

The breach extended beyond technical keys to include soul.md and memory files, which dictate the agent's personality and store logs of user activities. This level of access provides attackers with a detailed blueprint of the user's professional and personal life.

As AI agents move from experimental tools to everyday use, malware developers are expected to have a growing incentive to build specialized "AI-stealer" modules. This underscores the urgent need for robust cybersecurity for AI tools to protect digital identities from comprehensive compromise.

Recently, malicious Chrome extensions were observed exploiting the popularity of AI to steal user data, and last month the Evelyn Stealer targeted software developers via Visual Studio Code (VS Code) extensions.