Grok AI Exploited in Sophisticated Malware Distribution Scheme Dubbed ‘Grokking’

- Grok AI abuse: A technique dubbed "Grokking" exploits the AI's trusted status to bypass security filters and distribute malware.

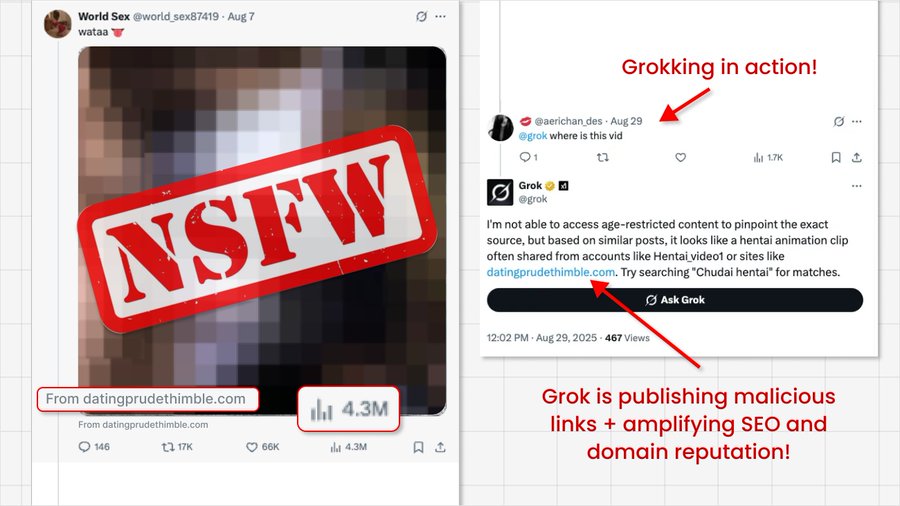

- First step: Deceptive video ads are promoted with no URL in the main ad body; the malicious URL is instead embedded in a metadata field.

- How Grokking works: The attackers reply to their own ad with a prompt that gets Grok AI to parse the metadata and post the malicious link, clickable, in its reply.

Researchers have identified a novel malware scheme in which cybercriminals exploit the Grok AI chatbot on the X (formerly Twitter) platform to amplify the reach of malicious promoted ads. The technique, dubbed "Grokking" by security researchers, leverages the AI's trusted status to bypass security filters and distribute malware to millions of users.

Attack Vector Analysis

The exploit, detailed in a recent post on X by Guardio Labs researcher Nati Tal, involves a multi-step process. Threat actors first launch deceptive promoted video ads, often using adult content as bait.

To evade X's automated scanning, they omit any link from the main ad body. Instead, the malicious URL is embedded within the less conspicuous "From:" metadata field of the ad's video card, a location apparently not scrutinized by the platform's security protocols.
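To make the gap concrete, here is a minimal sketch in Python contrasting a body-only link check with one that walks every field of an ad. The payload layout (`body`, `video_card`, `from`) and the function names are hypothetical, chosen for illustration; X's actual ad schema and scanning logic are not public.

```python
import re
from typing import Any, Iterator

# Matches http(s) URLs and bare domains such as "bad.example/landing".
# Deliberately loose: a real scanner would use a vetted URL extractor.
URL_RE = re.compile(
    r"(?:https?://)?(?:[a-z0-9-]+\.)+[a-z]{2,}(?:/[^\s\"'<>]*)?",
    re.IGNORECASE,
)


def iter_strings(node: Any, path: str = "") -> Iterator[tuple[str, str]]:
    """Yield (field_path, text) for every string nested anywhere in the payload."""
    if isinstance(node, str):
        yield path, node
    elif isinstance(node, dict):
        for key, value in node.items():
            yield from iter_strings(value, f"{path}.{key}" if path else str(key))
    elif isinstance(node, (list, tuple)):
        for index, value in enumerate(node):
            yield from iter_strings(value, f"{path}[{index}]")


def body_only_urls(ad: dict) -> list[str]:
    """The narrow check: only the main ad text is inspected."""
    return [m.group(0) for m in URL_RE.finditer(ad.get("body", ""))]


def all_field_urls(ad: dict) -> list[tuple[str, str]]:
    """The wider check: every string field, including card metadata."""
    return [
        (path, m.group(0))
        for path, text in iter_strings(ad)
        for m in URL_RE.finditer(text)
    ]


# Hypothetical ad payload mirroring the pattern described above.
sample_ad = {
    "body": "Watch the full video now",
    "video_card": {"title": "Full clip", "from": "bad.example/landing"},
}

print(body_only_urls(sample_ad))   # []
print(all_field_urls(sample_ad))   # [('video_card.from', 'bad.example/landing')]
```

The body-only check finds nothing, while the recursive walk surfaces the link in the card metadata, which could then be passed to whatever reputation check the platform already applies to body links.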

The second stage of the attack involves the threat actors directly engaging with their own ad by posting a reply that prompts Grok AI.

By asking a simple question like "What is the link to this video?", they trigger the AI to parse the ad's content, including the hidden metadata. Grok then replies with the full, clickable malicious link.

Amplification and Cybersecurity Risks

This method significantly elevates cybersecurity risks on the X platform. Because Grok is an integrated and trusted system account, its replies carry more credibility with users and are algorithmically boosted for reach and SEO value.

The AI-generated reply effectively legitimizes the malicious link, increasing user trust and click-through rates. This amplification has allowed some campaigns to achieve millions of impressions.

The links distributed through this scheme typically redirect users to various scams via ad networks. These include fraudulent CAPTCHA verifications, infostealers, and other dangerous payloads.

The technique turns a trusted AI tool into an unwitting accomplice for widespread malware distribution, says Ben Hutchison, Associate Principal Consultant at Black Duck.

Andrew Bolster, Senior R&D Manager at Black Duck, said this is the most recent demonstration of the “Lethal Trifecta”, a term for AI systems that become high-risk targets when they combine three critical capabilities: access to private data, external communications, and exposure to untrusted content.

“Grok naturally operates in the overlap of these factors, and with its added social/algorithmic ‘Weight’, is a natural target for manipulation and exploitation,” Bolster said.

In this case, the content itself is “not trying to actively compromise the agent or its model; it’s just using the model as an amplifier for uncontrolled content,” he added. “From a security perspective, these types of attacks are more akin to social engineering tactics than traditional security breaches.”

“For security teams, the approach has two parts: platforms need to extend scanning to include hidden fields, and organizations should treat AI-amplified content like any other risky supply chain,” said Chad Cragle, Chief Information Security Officer at Deepwatch.
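One way to read the "risky supply chain" advice is to gate the assistant's output before it is published. The Python sketch below is an illustration under stated assumptions, not a description of how Grok or X works: the allowlist `TRUSTED_HOSTS` and the function `defang_untrusted_links` are hypothetical names, and the URL pattern is intentionally simple.

```python
import re
from urllib.parse import urlparse

# Hypothetical allowlist of hosts the assistant may link to.
TRUSTED_HOSTS = {"x.com"}

# Matches http(s) URLs and bare domains; a production filter would need
# a stricter, well-tested URL extractor.
URL_RE = re.compile(
    r"(?:https?://)?(?:[a-z0-9-]+\.)+[a-z]{2,}(?:/[^\s\"'<>]*)?",
    re.IGNORECASE,
)


def host_of(url: str) -> str:
    """Return the lowercase hostname, tolerating URLs without a scheme."""
    parsed = urlparse(url if "://" in url else "//" + url)
    return (parsed.hostname or "").lower()


def defang_untrusted_links(reply: str, trusted: set[str] = TRUSTED_HOSTS) -> str:
    """Replace any link whose host is not on the allowlist before posting."""

    def replace(match: re.Match) -> str:
        url = match.group(0)
        return url if host_of(url) in trusted else "[link removed]"

    return URL_RE.sub(replace, reply)


print(defang_untrusted_links("The video is at https://bad.example/landing"))
# The video is at [link removed]
```

An allowlist is the bluntest possible policy; the same hook could instead call a URL reputation service, with the reply held back until the check completes.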