ChatGPT Conversations May Be Reviewed and Sent to Police If Deemed ‘Dangerous’

- Chat privacy: OpenAI has confirmed that some conversations with ChatGPT may be reported to the police.

- Monitoring systems: The service uses automated detection together with human review.

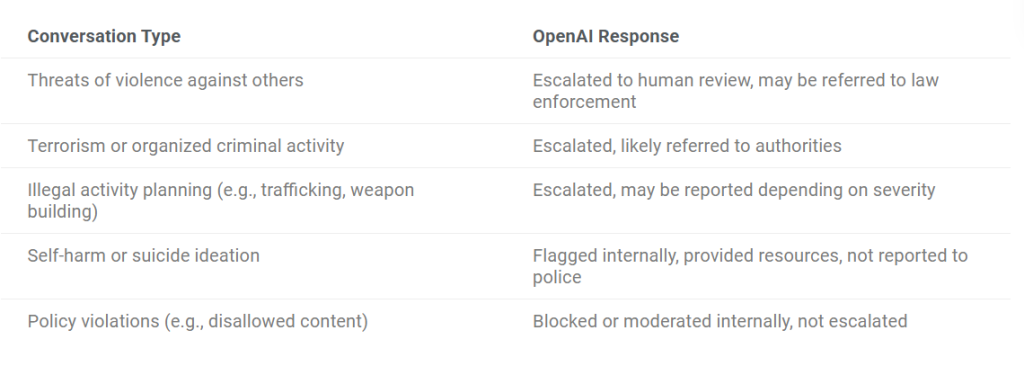

- How cases are handled: Human reviewers evaluate chats flagged as threats to others, while conversations involving self-harm or suicidal ideation are flagged internally only.

Artificial intelligence (AI) company OpenAI has formally acknowledged that ChatGPT conversations containing violent threats or signs of imminent harm to others may be referred to law enforcement after internal review.

Policy Framework and Escalation Procedures

According to OpenAI, the platform uses two layers of monitoring: automated detection followed by human review.

ChatGPT conversations are scanned for policy violations, and whether a conversation is escalated depends on the type and severity of the threat involved.

The company handles different categories of content differently. Conversations involving threats of violence against others, terrorism-related planning, or organized criminal activity are escalated to human review teams and may be referred to external law enforcement agencies.

The statement highlights that conversations where users are planning to harm others are sent to “specialized pipelines” to be reviewed by “a small team trained on our usage policies and who are authorized to take action, including banning accounts.” Conversations that human reviewers determine constitute “an imminent threat of serious physical harm to others” may be referred to law enforcement.

By contrast, conversations indicating self-harm or suicidal ideation are flagged internally so that users can be directed to support resources, but they are explicitly excluded from referral to law enforcement in order to protect user privacy.

“We are currently not referring self-harm cases to law enforcement to respect people’s privacy, given the uniquely private nature of ChatGPT interactions,” said the company.

Technical Implementation Framework

The monitoring system distinguishes between harm directed at third parties and self-directed harm, and police referrals are reserved exclusively for threats against other individuals or entities. Standard policy violations and prohibited content are handled through internal moderation without external escalation.

OpenAI emphasizes that law enforcement referrals are rare within its broader content moderation process, and maintains that most conversations remain private and are never seen by human reviewers.

Privacy Implications and Governance Concerns

The announcement has prompted substantial discussion about AI privacy in the technology sector and among privacy advocates. Critics point to potential surveillance risks and the possibility of mission creep in AI content monitoring, and warn that knowing conversations may be monitored could discourage users from speaking openly.

Critics also note that OpenAI has not disclosed how human reviewers are trained, how escalation decisions are made, who can access flagged conversations, or how long those conversations are stored. These gaps leave uncertainty about how the policy is implemented and what safeguards are in place.

Industry Implications and Regulatory Alignment

This policy reflects how the industry is adapting to growing regulatory scrutiny and public safety expectations. It may also set a precedent that leads other conversational AI platforms to adopt similar monitoring and reporting mechanisms.

The framework illustrates how OpenAI is trying to balance user privacy with public safety, aiming to prevent harm while preserving the platform's usefulness and the trust of its enterprise and consumer users.

The announcement follows news reports about a man reportedly affected by “AI psychosis” who killed his mother in a murder-suicide.

In other news, recent reports described PromptLock as the first AI-powered ransomware, and a ChatGPT-5 downgrade attack was shown to bypass security using simple text commands. Elon Musk's xAI has also sued a former engineer who now works at OpenAI, alleging theft of Grok trade secrets.