AI Progress Hits Pause: Security Gaps Force Firms to Rethink Adoption

- Widespread incidents: Three-quarters of organizations have experienced at least one data security incident related to AI use.

- Adoption delays: Data security concerns and inaccurate outputs are the primary reasons organizations are slowing down AI rollouts.

- Governance gaps: Overconfidence is prevalent, as most organizations still struggle with effective data classification and preventing security incidents.

Fundamental data challenges are significantly hampering enterprise AI adoption: a new study found that although generative AI use is becoming widespread, 85.7% of organizations have slowed their rollouts.

The top impediments are inaccurate AI outputs (68.7%) and serious concerns about data security in AI (68.5%), resulting in deployment delays of up to a year for over three-quarters of organizations.

Prevalent AI-Related Data Breaches

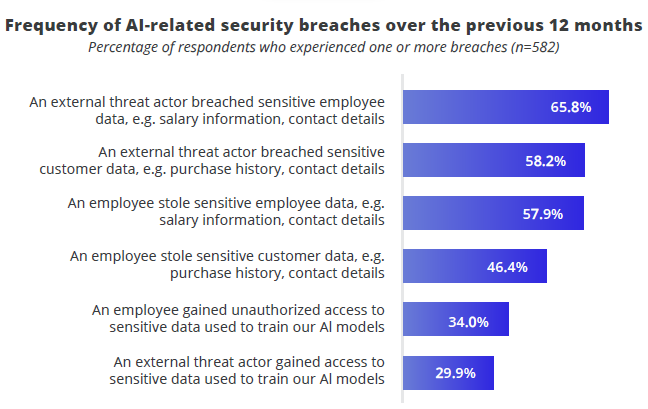

A new study by AvePoint, "The State of AI: Go Beyond the Hype to Navigate Trust, Security and Value," which surveyed 775 global business leaders, underscores the tangible risks of inadequate governance, with a staggering 75.1% of organizations reporting at least one AI-related data security incident in the past 12 months.

These breaches, often involving the oversharing of sensitive employee or customer information, expose the disconnect between perceived and actual security effectiveness.

Despite 90.6% of organizations believing they have a capable information management program, the high rate of security incidents indicates that existing frameworks are not sufficient to manage the complexities introduced by AI.

This has prompted a planned increase in investment in third-party governance and security tools.

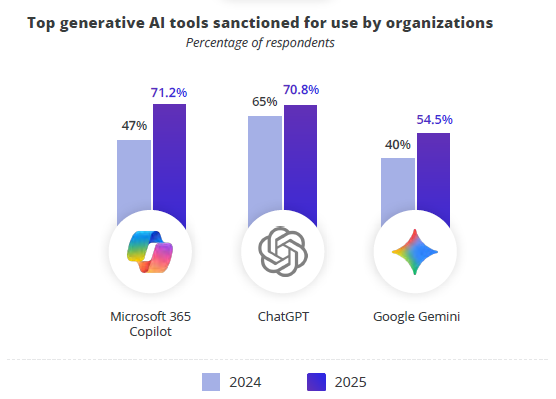

Over the past year, more employees have gained access to approved generative AI tools and most now use them frequently, with Microsoft 365 Copilot and ChatGPT used at similar rates, the report found.

A Call for Stronger AI Governance Strategies

The findings point to a critical need for more robust AI governance strategies. The report shows that while Microsoft 365 Copilot has become the top sanctioned AI tool, the use of unsanctioned "shadow AI" is also growing, widening the governance gap.

To mitigate these AI adoption challenges, AvePoint recommends that organizations prioritize building trust through stronger data hygiene, implementing upstream security controls, and evolving AI Acceptable Use policies. The focus must shift from simply measuring AI adoption to measuring its impact and ensuring a resilient, secure data foundation.

The rapid development of AI technologies undermines organizational credibility, directly threatening brand value and long-term stakeholder trust, said Ishpreet Singh, Chief Information Officer at Black Duck.

Nicole Carignan, Senior Vice President, Security & AI Strategy, and Field CISO at Darktrace, recommends starting with understanding how data is sourced, structured, classified, and secured. “Solid data foundations are essential to ensuring accuracy, accountability, and safety throughout the AI lifecycle.”

Organizations need AI-powered analysis of attack innovations and insights into their own specific weaknesses that are exploitable by external parties, said John Watters, CEO and Managing Partner of iCOUNTER.

Diana Kelley, Chief Information Security Officer at Noma Security, recommends the adoption of an AI Bill of Materials (AIBOM) for effective supply chain security and AI vulnerability management.

“Robust red team and pre-deployment testing remain vital as does runtime monitoring and logging, which round out the approach by providing the visibility to detect and in some cases even block attacks during use,” added Kelley.

Kris Bondi, CEO and Co-Founder of Mimoto, stated that AI agents can be used to help reduce the volume of potential security threats that a security team will need to address themselves.

Security needs to be part of the development lifecycle from day one, not simply an add-on at launch, according to Randolph Barr, Chief Information Security Officer at Cequence Security.

A new cloud and AI security report from Tenable and CSA revealed critical gaps: a third of organizations with AI workloads have already experienced an AI-related data breach.