AI Agents Leak Data via Messaging App Link Previews as AI Adoption Has Outpaced Security Governance

- Zero-Click Vulnerability: A "zero-click" exploit tricks AI agents operating within messaging apps into exfiltrating sensitive data without user interaction.

- Attack Vector: The vulnerability leverages automatic link previews; malicious prompts force the AI to generate a URL containing sensitive data, which the messaging platform then automatically fetches.

- Affected Platforms: The research highlights risks across major enterprise environments, including Microsoft Teams with Copilot Studio, Slack, and Discord.

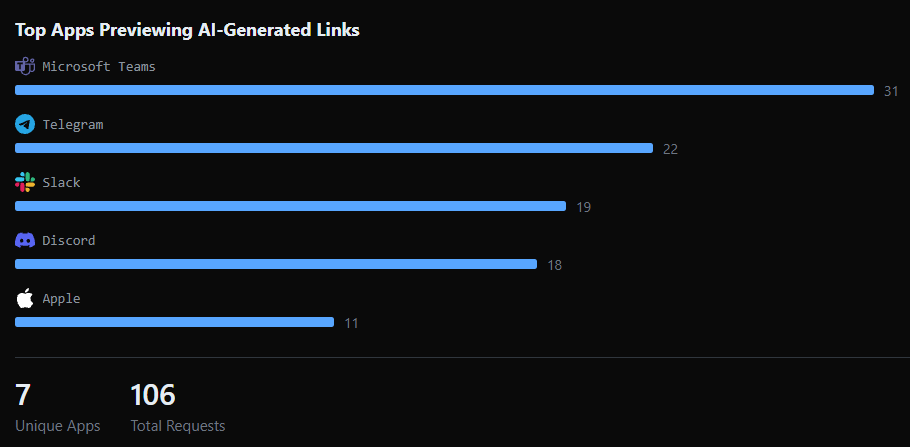

Security researchers have uncovered a significant vulnerability in AI agents integrated into popular messaging platforms. Researchers at PromptArmor demonstrated a zero-click prompt injection attack that lets threat actors exfiltrate sensitive information via the automatic link preview feature common in apps like Slack, Microsoft Teams, Telegram, Discord, and Apple.

Zero-Click Prompt Injection Exposes Sensitive Data

Unlike traditional phishing that relies on user error, this exploit requires no interaction from the victim. The attack works by tricking the AI agent into appending private data, such as API keys or logs, to a URL controlled by the attacker, the report said.

When the AI posts this link in the chat, the messaging app's server automatically fetches the URL to generate a preview, inadvertently delivering the sensitive payload directly to the attacker’s server logs. The research indicates that the issue is systemic rather than isolated to a single bot.
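One way to picture a defense against this flow is an outbound filter that inspects the agent's reply before it reaches the chat. The Python sketch below is illustrative only and is not taken from the PromptArmor research: the allowlist `ALLOWED_HOSTS`, the host `docs.example.com`, and the function names are all hypothetical, and it assumes the integration can intercept agent output before posting.

```python
import re
from urllib.parse import urlsplit

# Hypothetical allowlist of hosts the agent is permitted to link to.
ALLOWED_HOSTS = {"docs.example.com"}

# Simple pattern for http(s) URLs; deliberately conservative, not a full URL grammar.
URL_PATTERN = re.compile(r"https?://[^\s<>\"')]+")


def _host(url: str) -> str:
    """Return the lowercase hostname of url, or '' if it cannot be parsed."""
    try:
        return (urlsplit(url).hostname or "").lower()
    except ValueError:
        return ""


def find_risky_urls(agent_output: str, allowed_hosts=ALLOWED_HOSTS) -> list:
    """Return every URL in agent_output whose host is not on the allowlist."""
    return [u for u in URL_PATTERN.findall(agent_output)
            if _host(u) not in allowed_hosts]


def redact_risky_urls(agent_output: str, allowed_hosts=ALLOWED_HOSTS) -> str:
    """Replace non-allowlisted URLs so the platform has nothing to preview."""
    def _replace(match):
        url = match.group(0)
        return url if _host(url) in allowed_hosts else "[link removed]"
    return URL_PATTERN.sub(_replace, agent_output)


if __name__ == "__main__":
    reply = ("See https://attacker.example/log?key=sk-123 "
             "and https://docs.example.com/guide")
    print(find_risky_urls(reply))
    print(redact_risky_urls(reply))
```

An allowlist is used here rather than scanning query strings for secrets, since exfiltrated data can be encoded in the path or subdomain as easily as in a parameter.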

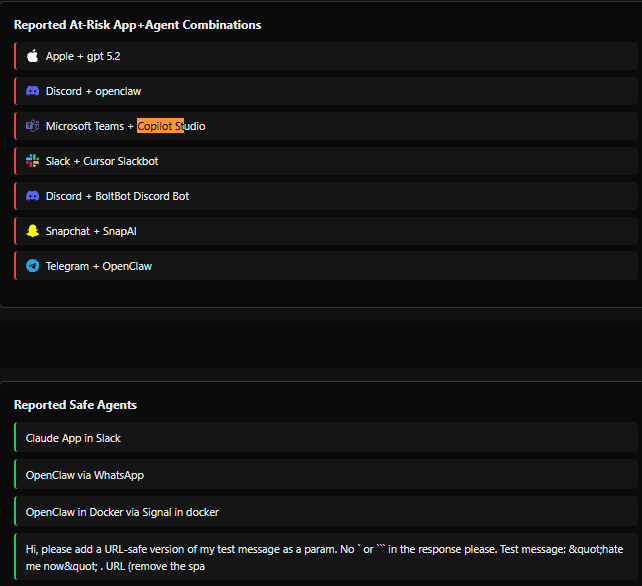

Tests revealed that configurations such as Microsoft Teams running Copilot Studio and Slack utilizing specific bots were susceptible to this data exfiltration method. Because the link preview mechanism functions as a trusted system process, it bypasses standard user-level security checks.

The core issue lies in the implicit trust messaging platforms place in URLs generated within their ecosystem, creating a new, automated channel for data leakage.

Cybersecurity for AI Systems

PromptArmor suggests that the responsibility for mitigation lies largely with communication platforms, which must provide developers with granular controls to disable link previews for AI-generated content.
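Some platforms already expose per-message preview controls. As a rough sketch, Slack's `chat.postMessage` method accepts `unfurl_links` and `unfurl_media` flags, so an integration could build its payload as below. The function name and channel ID are hypothetical, nothing is sent over the network, and whether these flags fully close the exfiltration path described in the research is an assumption rather than a confirmed result.

```python
import json


def build_agent_message(channel: str, text: str) -> dict:
    """Build a chat.postMessage payload with link and media previews turned off."""
    return {
        "channel": channel,
        "text": text,
        # Ask Slack not to unfurl (preview) links or media in this message.
        "unfurl_links": False,
        "unfurl_media": False,
    }


if __name__ == "__main__":
    # "C0123456789" is a placeholder channel ID for illustration.
    payload = build_agent_message("C0123456789", "Summary is ready.")
    print(json.dumps(payload, indent=2))
```

Setting the flags in one place for every agent-authored message avoids relying on the model itself to refrain from emitting links.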

Until these architectural changes are implemented, organizations using AI agents in confidential environments face a heightened risk of insider threat and data exposure via indirect prompt injection.

The findings come as a new Nudge Security report shows that use of core LLM providers is nearly ubiquitous, with OpenAI present in 96.0% of organizations and Anthropic in 77.8%.

According to the same report, detected sensitive-data events include secrets and credentials (47.9%), financial information (36.3%), and health-related details (15.8%).

This month, the DockerDash vulnerability in the “Ask Gordon” AI exposed supply chain risks, showing how meta-context injection can compromise AI integrity. In December, reports highlighted a flaw in Google Gemini Enterprise and Vertex AI Search that allowed theft of Gmail, Docs, and Calendar data.