AI Adoption Spikes in Workspaces in 2025, Cybercriminal Use for Phishing Grows

- AI use increase: Web traffic to AI tool sites grew by 50%, reaching 10.53 billion visits in January 2025.

- Connected cyber threats: The significant spike in web traffic to GenAI sites has been accompanied by a corresponding surge in AI-driven cyber threats.

- Cybercriminal exploitation: Modern enterprise security is currently threatened by phishing kits and the use of shadow AI.

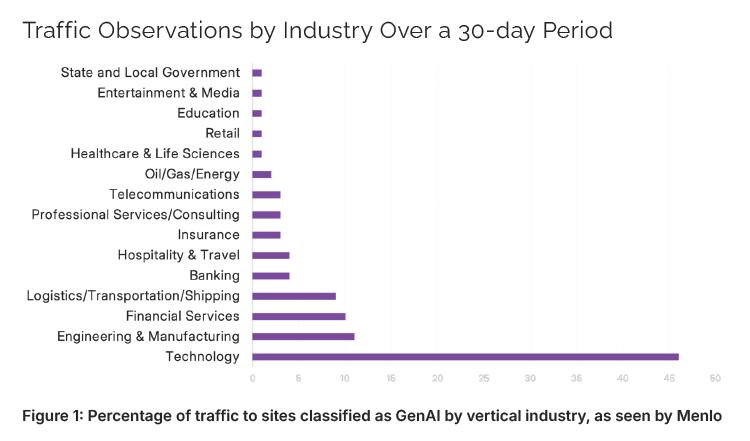

The accelerated adoption of generative AI (GenAI) is driving substantial shifts in the workplace, with web traffic to AI tools surging by 50%. As many as 10.53 billion visits were recorded in January 2025, based on telemetry data from hundreds of global organizations.

A Menlo Security 2025 report highlights how GenAI tools, when combined with OSINT and shadow AI, intensify enterprise risk through advanced phishing and data leakage.

Generative AI Adoption and Growth

A Menlo Security report stated that up to 80% of generative AI (GenAI) interactions occur through web browsers, as AI becomes increasingly accessible via cross-device integration.

While regions like the Americas lead in absolute traffic, the Asia-Pacific stands out for its rapid adoption of AI, with 75% of surveyed organizations in China leveraging generative AI tools.

The Impact on Enterprise Security

Menlo's report underscores the security risks tied to unregulated AI use, notably the rise of “BYOAI,” or Bring Your Own AI, also known as shadow AI. Employees accessing unsanctioned, free AI tools at work often introduce vulnerabilities, as these platforms may share business data to train foundational models.

Additionally, phenomena like phishing-as-a-service (PhaaS) exploit AI’s capabilities, leading to a 130% increase in zero-hour phishing attacks.

AI-assisted attacks now deploy highly targeted tactics, blending open-source intelligence (OSINT) with generative AI to craft effective spear-phishing campaigns.

Attackers capitalized on the interest in AI to create typosquatting websites for popular AI apps that appear legitimate. Similarly, cybercriminals took over the accounts of browser extensions that provide AI services, including ChatGPT for Google Meet, ChatGPT App, and ChatGPT Quick Access.
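One common way to flag lookalike domains of this kind is to compare each visited hostname against a list of known AI services and measure how many character edits separate them. The sketch below is illustrative only and is not taken from the Menlo Security report: the domain list, the `looks_like_typosquat` helper, and the distance threshold of 2 are all assumptions chosen for the example.

```python
from typing import Optional

# Illustrative list of well-known AI service domains (an assumption for
# this example, not a list from the report).
KNOWN_AI_DOMAINS = ["chatgpt.com", "claude.ai", "gemini.google.com"]


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance computed row by row with dynamic programming."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete from a
                            curr[j - 1] + 1,      # insert into a
                            prev[j - 1] + cost))  # substitute
        prev = curr
    return prev[-1]


def looks_like_typosquat(domain: str, max_distance: int = 2) -> Optional[str]:
    """Return the known domain that `domain` appears to imitate, or None.

    Hypothetical helper: an exact match is treated as legitimate, and a
    near match (1..max_distance edits) is treated as suspicious.
    """
    domain = domain.lower().strip(".")
    if domain in KNOWN_AI_DOMAINS:
        return None
    for known in KNOWN_AI_DOMAINS:
        if edit_distance(domain, known) <= max_distance:
            return known
    return None


if __name__ == "__main__":
    for candidate in ["chatgpt.com", "chatgtp.com", "cha1gpt.com", "example.org"]:
        print(candidate, "->", looks_like_typosquat(candidate))
```

A plain edit-distance check misses other tricks, such as different top-level domains or visually similar Unicode characters, so it would be one signal among several rather than a complete defense.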

Recommendations for Securing AI Usage

The Menlo Security AI report advises enterprises to adopt stringent policies against shadow AI, enforce data loss prevention (DLP) measures, and restrict unauthorized access to AI platforms. Organizations are urged to establish sanctioned AI tools and enforce controls over uploads, downloads, and direct text inputs to safeguard their networks.

Nicole Carignan, Senior Vice President, Security & AI Strategy, and Field CISO at Darktrace, stated that organizations must leverage AI-powered tools that provide granular, real-time visibility into their environments to combat AI-driven attacks. She added that they should also integrate machine-driven response, in either autonomous or human-in-the-loop modes, to accelerate security team response.

“The gap in confidence and understanding of AI creates a massive opportunity for AI native security products to be created, which can ease this gap,” said Satyam Sinha, CEO and Co-founder at Acuvity. Jamie Boote, Associate Principal Security Consultant at Black Duck, recommended that organizations build a strong vision for secure AI use and development.

Dave Gerry, CEO of Bugcrowd, stated that AI-generated exploits and misinformation are already present, so the primary risk is erosion of trust, with the security community needing to focus on model manipulation, model output validation, and adversarial testing of AI systems.

“This is where crowd-led testing matters most,” Gerry added. “We need more incentives for constructive interference—helping the AI ecosystem improve by breaking things safely.”

On July 31, 2025, TechNadu published a report on how GenAI assistants accidentally leak data. In other recent news, an OpenAI ChatGPT privacy breach exposed user chats via the new ‘Make Link Discoverable’ setting.

Exclusive Insights from Menlo Security on GenAI Risks in Organizations

Roslyn Rissler, Senior Cybersecurity Strategist at Menlo Security, told TechNadu that the greatest risk of data loss or leakage is posed by any type of “shadow AI” due to the lack of visibility from enterprise IT and security teams.

This happens because workers often use personal logins (and even unmanaged or personal devices) to access free tiers of powerful GenAI tools. Their interactions, contained in browser traffic, may slip past traditional security detection, as DLP tools, endpoint monitors, and firewalls may not track such traffic or may miss it entirely.

These tools share prompts and responses with their training model, and “information leaving the enterprise in any form could be considered a regulatory breach.” This makes regulated environments, such as finance and healthcare, vulnerable to greater risks due to the sensitive nature of the data they handle.

“In the absence of guidance and education enforced automatically and inline, users will work with the tools that they know,” Rissler mentioned, adding that use of these tools should be flagged for visibility into outgoing traffic to prevent DLP-related issues. Flagging can also provide “an opportunity to educate users of sanctioned GenAI tools and the reasons for their use.”

Yet these issues can be remedied quickly with a browser-centric approach: educating employees on sanctioned enterprise or business-level GenAI tools and having them use those tools, logging attempts to use a non-sanctioned tool, and redirecting users to the preferred one. Organizations can also enforce copy/paste or upload/download restrictions, character input limits, and watermarking on GenAI use.
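The controls described above can be pictured as a small decision function applied to each GenAI-bound request. The following is a minimal sketch under stated assumptions, not Menlo Security's implementation: the hostnames, the redirect URL, the 2,000-character limit, and the `evaluate` function are all hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import List, Optional

# All values below are hypothetical placeholders for illustration.
SANCTIONED_TOOLS = {"ai.example-corp.com"}
GENAI_DOMAINS = SANCTIONED_TOOLS | {"free-genai.example.net"}
REDIRECT_TARGET = "https://ai.example-corp.com"
MAX_PROMPT_CHARS = 2000


@dataclass
class Decision:
    action: str                        # "allow", "redirect", or "block"
    reason: str
    redirect_to: Optional[str] = None


def evaluate(host: str, prompt_chars: int, is_upload: bool,
             audit_log: List[str]) -> Decision:
    """Apply the policy steps in order and return the resulting decision."""
    if host not in GENAI_DOMAINS:
        return Decision("allow", "not categorized as GenAI")
    if host not in SANCTIONED_TOOLS:
        # Log the attempt for visibility, then steer the user to the
        # sanctioned tool instead of silently blocking.
        audit_log.append(f"non-sanctioned GenAI attempt: {host}")
        return Decision("redirect", "non-sanctioned GenAI tool", REDIRECT_TARGET)
    if is_upload:
        return Decision("block", "file uploads are restricted")
    if prompt_chars > MAX_PROMPT_CHARS:
        return Decision("block", "prompt exceeds character input limit")
    return Decision("allow", "sanctioned use within limits")


if __name__ == "__main__":
    log: List[str] = []
    print(evaluate("free-genai.example.net", 120, False, log))
    print(evaluate("ai.example-corp.com", 5000, False, log))
    print(log)
```

Redirecting rather than blocking outright keeps the user productive while still recording the attempt, which matches the emphasis on visibility and education in the recommendations.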

Rissler said Menlo solutions allow blocking traffic categorized as GenAI by default, but warned that categorization engines cannot keep pace with new tools, making this a partial solution.

Meanwhile, regional AI-specific regulations are currently being enforced in the EU, China, several U.S. states, and other countries.

Update: Expert insights from Menlo Security and other cybersecurity leaders have been added to expand on the risks and recommendations related to GenAI adoption.