AI-Assisted Cloud Intrusion Compromises AWS Environment in 8 Minutes, Highlights New Cloud Security Threats

- Rapid Escalation: Sysdig observed a threat actor escalate from initial access to administrative privileges in an AWS environment in under 10 minutes.

- AI-Driven Tactics: The operation showed strong evidence of being AI-assisted, leveraging LLMs for reconnaissance, code generation, and real-time decision-making.

- Attack Vectors: Key vectors involved credentials stolen from public S3 buckets, privilege escalation through Lambda code injection, and lateral movement across 19 unique AWS principals.

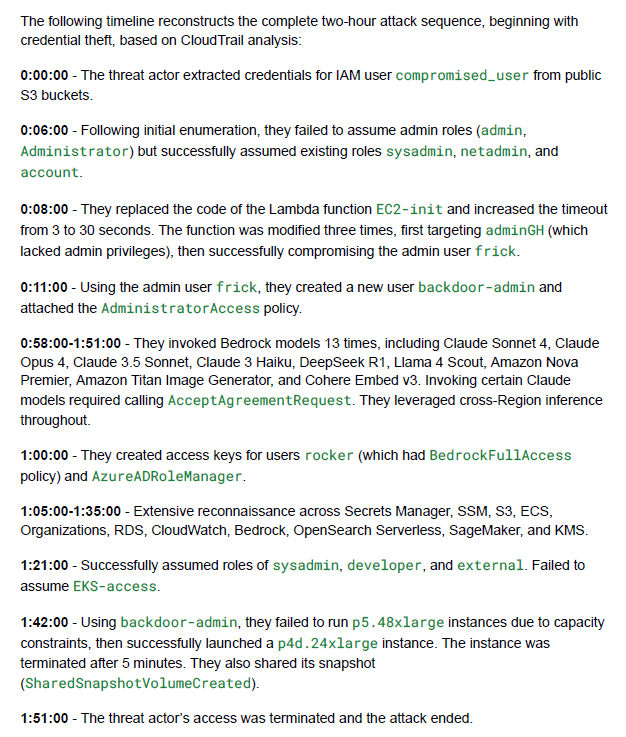

A sophisticated AI-assisted cloud intrusion was observed on November 28, 2025, in which an attacker gained full administrative access to an AWS account in eight minutes. The attack began when the threat actor used valid test credentials found in public S3 buckets that contained Retrieval-Augmented Generation (RAG) data.

This initial access provided permissions to AWS Lambda, which became the central vector for privilege escalation.
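One way to catch this kind of exposure before an attacker does is to scan files for strings shaped like AWS access key IDs before they are uploaded to a bucket. The following is a minimal sketch, not a method from the Sysdig report: the function name `find_access_key_ids` is hypothetical, the pattern assumes the commonly documented 20-character key ID format (`AKIA` for long-term keys, `ASIA` for temporary ones), and the sample string uses the placeholder key from AWS documentation.

```python
import re

# Assumption: access key IDs are 20 uppercase alphanumeric characters,
# starting with AKIA (long-term IAM user keys) or ASIA (temporary STS keys).
ACCESS_KEY_ID = re.compile(r"\b(?:AKIA|ASIA)[A-Z0-9]{16}\b")


def find_access_key_ids(text: str) -> list[str]:
    """Return the distinct key-ID-shaped strings found in text, in first-seen order."""
    seen: list[str] = []
    for match in ACCESS_KEY_ID.finditer(text):
        if match.group() not in seen:
            seen.append(match.group())
    return seen


# Illustrative input only; AKIAIOSFODNN7EXAMPLE is the placeholder key from AWS docs.
sample = 'aws_access_key_id = AKIAIOSFODNN7EXAMPLE\nbucket = "rag-test-data"'
for key_id in find_access_key_ids(sample):
    print("possible access key ID:", key_id)
```

A match only means the string has the right shape. Any hit would still need to be checked and, if real, the key rotated.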

From Lambda Injection to GPU Abuse

The Sysdig Threat Research Team (TRT) has detailed that after gaining initial access, the threat actor conducted rapid reconnaissance across multiple AWS and AI services before pivoting to Lambda code injection.

By replacing the code of an existing Lambda function that ran with an administrative execution role, the attacker created new access keys for an admin-level user. This technique granted the permissions needed to move laterally, ultimately compromising 19 AWS principals across 14 sessions, using six assumed IAM roles.
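From a defender's point of view, the escalation described above leaves a recognizable sequence in CloudTrail: a function code update followed shortly by an access key creation. The sketch below shows one way to flag that sequence. It is an illustration rather than Sysdig's detection logic. It assumes records are already parsed into dictionaries with the standard `eventName` and `eventTime` fields, that Lambda code updates are logged with an event name beginning with `UpdateFunctionCode`, and that a 10-minute window is a reasonable threshold. The function name `suspicious_pairs` and the sample records are made up.

```python
from datetime import datetime, timedelta


def parse_time(value: str) -> datetime:
    # CloudTrail eventTime values look like "2025-11-28T12:00:00Z".
    return datetime.strptime(value, "%Y-%m-%dT%H:%M:%SZ")


def suspicious_pairs(records, window=timedelta(minutes=10)):
    """Yield (update_event, key_event) pairs where an access key is created
    within `window` after a Lambda function's code was changed."""
    ordered = sorted(records, key=lambda r: parse_time(r["eventTime"]))
    updates = []
    for record in ordered:
        name = record.get("eventName", "")
        when = parse_time(record["eventTime"])
        if name.startswith("UpdateFunctionCode"):
            updates.append((when, record))
        elif name == "CreateAccessKey":
            for updated_at, update in updates:
                if when - updated_at <= window:
                    yield update, record


# Made-up records for illustration; real CloudTrail records carry many more fields.
records = [
    {"eventName": "UpdateFunctionCode20150331v2", "eventTime": "2025-11-28T12:00:00Z"},
    {"eventName": "CreateAccessKey", "eventTime": "2025-11-28T12:03:00Z"},
    {"eventName": "CreateAccessKey", "eventTime": "2025-11-28T13:00:00Z"},
]
for update, key_event in suspicious_pairs(records):
    print(update["eventTime"], "->", key_event["eventTime"])
```

The sketch deliberately ignores which principal made each call, since in this attack pattern the code update and the key creation come from different identities (the compromised user and the function's execution role).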

The speed and efficiency of the operation, along with specific artifacts such as code that appears to have been generated by large language models (LLMs) and contains Serbian comments, strongly indicate the use of AI to automate and accelerate the attack chain.

The actor then engaged in LLMjacking, the abuse of a victim's account to run LLM workloads, by invoking multiple Amazon Bedrock models. The actor also attempted to provision a high-performance P5 GPU instance for resource abuse, demonstrating a clear intent to exploit cloud AI and high-performance computing (HPC) applications.

After verifying that model invocation logging was disabled, the threat actor invoked multiple AI models:

- Claude Sonnet 4

- Claude Opus 4

- Claude 3.5 Sonnet

- Claude 3 Haiku

- DeepSeek R1

- Llama 4 Scout

- Amazon Nova Premier

- Amazon Titan Image Generator

- Cohere Embed v3
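Because the actor checked the logging state before invoking models, defenders may want to run the same check on a schedule. The sketch below evaluates a response shaped like the one boto3's `get_model_invocation_logging_configuration` returns for the `bedrock` client. The helper name `invocation_logging_enabled` is hypothetical, and treating "a CloudWatch or S3 destination is configured" as "enabled" is a simplifying assumption.

```python
def invocation_logging_enabled(response: dict) -> bool:
    """Return True if the response describes at least one log destination.

    Assumption: logging counts as enabled when `loggingConfig` contains a
    `cloudWatchConfig` or an `s3Config` entry.
    """
    config = response.get("loggingConfig") or {}
    return "cloudWatchConfig" in config or "s3Config" in config


# Against a live account, the response would come from something like:
#   import boto3
#   response = boto3.client("bedrock").get_model_invocation_logging_configuration()
# The dictionaries below are hand-written stand-ins, not real API output.
disabled = {}
enabled = {"loggingConfig": {"s3Config": {"bucketName": "example-log-bucket"}}}

print("disabled account flagged:", not invocation_logging_enabled(disabled))
print("enabled account passes:", invocation_logging_enabled(enabled))
```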

“The affected S3 buckets were named using common AI tool naming conventions, which the attackers actively searched for during reconnaissance,” the report said.

Improving Cloud Cybersecurity Defenses

The uploaded data includes a remote access component, allowing the threat actor to reconnect to the instance via JupyterLab even if their AWS credentials are revoked, the report said. Sysdig recommends:

- Apply the principle of least privilege to all IAM roles, especially Lambda execution roles, to prevent similar escalation paths.

- Limit UpdateFunctionCode and PassRole permissions to stop attackers from modifying function code or swapping execution roles.

- Ensure S3 buckets are not publicly accessible.

- Enable comprehensive monitoring, including model invocation logging for services such as Amazon Bedrock.
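The least-privilege recommendations can be partly checked by auditing IAM policy documents for the two permissions named above. What follows is a rough sketch under stated assumptions. It only looks at `Allow` statements whose `Resource` includes a bare `"*"`, it ignores `NotAction`, `NotResource`, and `Condition` elements, and the names `risky_statements` and `RISKY_ACTIONS` are hypothetical.

```python
from fnmatch import fnmatchcase

RISKY_ACTIONS = ("lambda:UpdateFunctionCode", "iam:PassRole")


def as_list(value):
    # IAM policy fields may hold a single value or a list of values.
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def risky_statements(policy: dict) -> list[tuple[str, str]]:
    """Return (Sid, action) pairs for Allow statements that grant a risky
    action on every resource ("*")."""
    findings = []
    for statement in as_list(policy.get("Statement")):
        if statement.get("Effect") != "Allow":
            continue
        if "*" not in as_list(statement.get("Resource")):
            continue
        # IAM action names are case-insensitive and may contain wildcards.
        patterns = [p.lower() for p in as_list(statement.get("Action"))]
        for action in RISKY_ACTIONS:
            if any(fnmatchcase(action.lower(), p) for p in patterns):
                findings.append((statement.get("Sid", "<no Sid>"), action))
    return findings


# Hand-written example policy; the account ID and role name are placeholders.
policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Sid": "LambdaAll", "Effect": "Allow", "Action": "lambda:*", "Resource": "*"},
        {
            "Sid": "ScopedPassRole",
            "Effect": "Allow",
            "Action": ["iam:PassRole"],
            "Resource": "arn:aws:iam::123456789012:role/example-role",
        },
    ],
}
for sid, action in risky_statements(policy):
    print(f"{sid}: grants {action} on all resources")
```

In this example only the wildcard Lambda statement is flagged. The `iam:PassRole` grant is scoped to a single role, which is the shape the recommendation aims for.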

“Organizations must immediately shift to using IAM roles with temporary credentials, or they will continue to fall victim to these preventable breaches,” said Jason Soroko, Senior Fellow at Sectigo.

“Detecting and defusing AI attacks in real-time demands AI-focused technology,” said Ram Varadarajan, CEO at Acalvio. “AI-powered threat detection and response can flag and shut down malicious behavior in real time,” added Shane Barney, CISO at Keeper Security.

These defensive measures are essential to counter the accelerating threat of automated, AI-driven attacks.