IBM Bob Prompt Injection Vulnerability: Researchers Could Bypass the AI Agent’s Security Measures

- Prompt Injection Vulnerability: IBM's AI coding agent, Bob, is allegedly susceptible to prompt injection attacks that can trick it into executing malicious commands.

- Guardrail Bypass: The agent's security measures could be bypassed by chaining commands and exploiting process substitution oversights.

- Malware Execution Risk: Attackers could deliver and execute arbitrary code by embedding malicious instructions in files that the AI agent processes.

Security researchers have uncovered a significant IBM Bob vulnerability, revealing that the new AI software development agent can be manipulated into executing malware. The flaw stems from a prompt injection attack vector, where malicious instructions embedded in a file, such as a README.md, can bypass the agent's security guardrails.

Prompt Injection Flaw in IBM's AI Coding Agent

While Bob is designed to seek user approval for potentially risky commands, researchers found that if a user has previously "always allowed" a benign command like echo, the agent can be tricked into running subsequent malicious commands chained to it without further prompts.

"For IBM Bob, we were able to bypass several defense mechanisms - ultimately, the 'human in the loop' approval function only ends up validating an allow-listed safe command, when in reality more sensitive commands were being run (that were not on the allow-list)," Shankar Krishnan, managing director at PromptArmor, has explained.

Because the human approval function only validates the safe command, the entire chain is executed. This bypasses the intended security oversight, enabling unauthorized code execution on the user's machine.

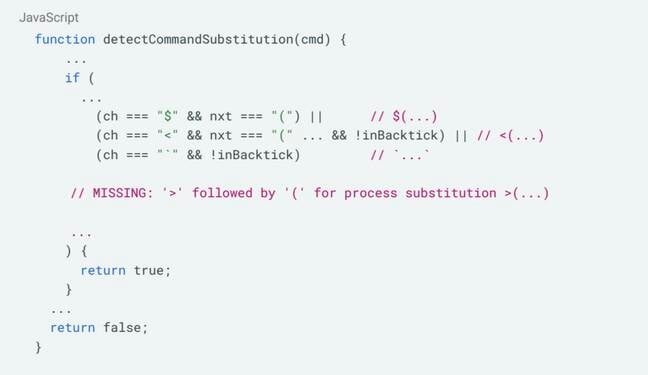

The agent's defenses successfully block command substitution but fail to check for process substitution or commands chained together using redirection operators like >.

Krishnan added that a programmatic defense would stop the attack flow and request user consent for the whole multi-part malicious command when using Claude instead.

AI Agent Security Risks and Mitigation

This vulnerability highlights the broader AI agent security risks associated with giving models access to local system tools. The attack scenario demonstrates that even with user approval mechanisms, sophisticated injection techniques can subvert safety protocols.

The researchers at PromptArmor, who discovered the flaw, note that this is particularly dangerous in developer workflows involving untrusted data, such as reviewing open-source repositories or websites. IBM has been allegedly notified of the vulnerability.

Salesforce announced in September that it would enforce trusted URLs for Agentforce and Einstein AI against prompt injection. One month earlier, Gmail hidden prompts discovered in an email phishing campaign suggested attackers attempt AI prompt injection.

In September, Grok AI was exploited in a sophisticated malware distribution scheme dubbed Grokking.