The Identity and Access Tug-of-War Between AI Agents and Humans

Eric Olden, CEO and Co-Founder of Strata Identity, highlights the risks surrounding AI agents and the danger of assuming they are static. Olden brings decades of identity leadership, shaped by founding Securant Technologies, guiding Symplified, and leading Oracle’s cloud security.

At Strata, he advances the Identity Fabric model, which adapts governance to agents operating across clouds. Olden warns that agents operate with far more access than anyone intends and their ecosystems often default to implicit trust.

His insight connects legacy challenges with current threats, especially as AI agents gain privilege and drift in behavior.

Olden explains how the absence of lifecycle governance creates over-privileged agents that quietly accumulate danger.

Read on to learn what happens when a single AI agent acts on behalf of several employees and exposes the ecosystem to unknown security risks.

Vishwa: What single identity risk do you see most frequently as enterprises adopt AI agents across their environments?

Eric: It’s the threat of agents operating with far more access than anyone intends. When teams are in a rush to automate, they give agents broad permissions to “just make it work,” and those privileges rarely get revisited.

You end up with autonomous code running with super-user authority, the kind of access no human employee would ever be approved to have.

The second problem is that most enterprises still lack basic visibility. They can’t say how many agents they have, who created them, what they can access, or what decisions they’re making. Without that baseline, it’s impossible to enforce guardrails or even spot problematic behavior.

That combination, excessive privilege and low visibility, is why AI agents can become a blind spot inside environments that otherwise have strong IAM controls.

This can lead to operational risk and regulatory exposure. You can’t secure what you can’t see, and you can’t govern what you don’t understand.

Vishwa: What is the biggest challenge in unifying human and AI agent identities under one governance framework?

Eric: Humans and agents behave fundamentally differently. A human logs in, stays active for a while, and operates in relatively predictable patterns. Agents don’t. They spin up and down, impersonate users, chain tasks across systems, and operate at machine speed, often without a person in the loop.

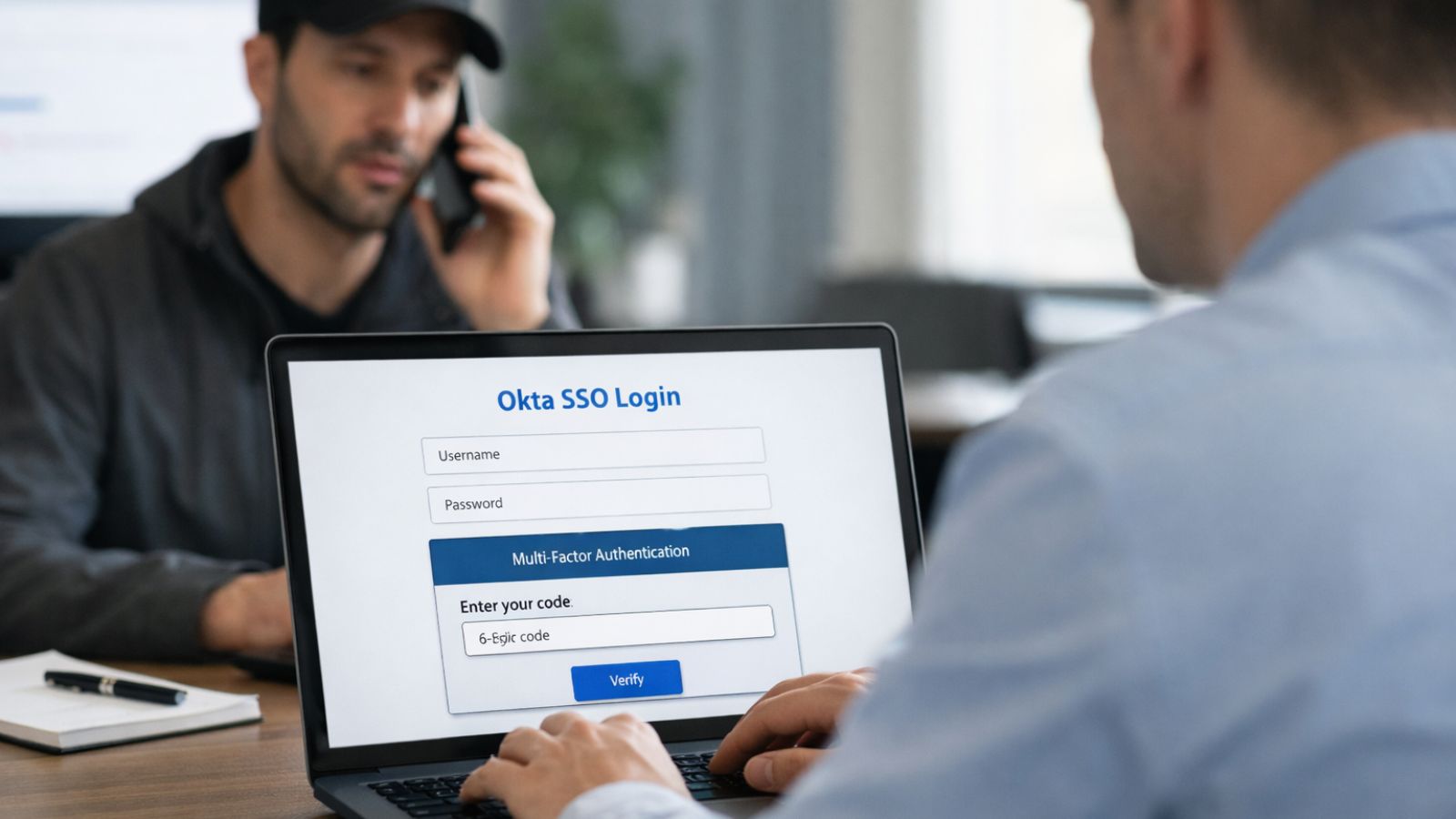

This creates a tug-of-war between two security models. Human IAM has evolved to a Zero Trust approach, where continuous validation is expected. Agent ecosystems often default to the opposite: implicit trust. If an agent spins up with a token, we assume it’s legitimate. That’s “Trust Always,” and it breaks the Zero Trust model entirely.

The real challenge is building a unified governance layer that handles both realities: a framework that captures identity, intent, delegation, and authorization consistently, whether the actor is a person at a keyboard or an autonomous workflow making split-second decisions.

Traditional IAM wasn’t built for this fluidity, and when organizations try to force agents into human identity models, that’s where the gaps, and the risks, begin to appear.

Vishwa: How does Strata ensure continuity and trust when an AI agent’s underlying identity provider, registry, or token authority becomes unavailable or compromised?

Eric: The key is not letting any single identity system become a point of failure. If your enforcement happens close to the agent’s runtime, near the actual API calls and actions, then outages or issues in upstream systems don’t immediately weaken your security posture.

You preserve continuity even when the issuing system is unavailable. Short-lived, tightly scoped credentials also limit blast radius.

If an agent’s authority is bound to its specific task, and that authority expires quickly, then an outage or compromise upstream has far less room to cause damage. The system knows what the agent is allowed to do even if it can’t reach the original source of truth.

Orchestration software is an elegant way to allow failover between identity providers (IdPs) and registries should one become unavailable or compromised.
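To make the idea of short-lived, task-bound credentials checked near the agent's runtime more concrete, here is a minimal Python sketch. It is an illustration only, not Strata's implementation: the names `issue_credential` and `authorize`, the claim fields, and the shared HMAC key are all assumptions made for the example. The point it shows is that the enforcement step can verify signature, expiry, and scope locally, without reaching the issuing system.

```python
import base64
import hashlib
import hmac
import json
import time

# Hypothetical shared signing key; a real deployment would use managed keys.
SECRET = b"demo-signing-key"


def issue_credential(agent_id, task, scopes, ttl_seconds, now=None):
    """Mint a signed credential bound to one task, with a short expiry."""
    now = time.time() if now is None else now
    claims = {
        "agent": agent_id,
        "task": task,
        "scopes": sorted(scopes),
        "exp": now + ttl_seconds,
    }
    payload = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode())
    signature = hmac.new(SECRET, payload, hashlib.sha256).hexdigest()
    return payload.decode() + "." + signature


def authorize(credential, required_scope, now=None):
    """Check signature, expiry, and scope locally, without calling the issuer."""
    now = time.time() if now is None else now
    try:
        payload, signature = credential.rsplit(".", 1)
    except ValueError:
        return False
    expected = hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()
    # Constant-time comparison; reject anything that was altered after signing.
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        return False
    claims = json.loads(base64.urlsafe_b64decode(payload))
    if now >= claims["exp"]:
        return False  # expired credentials carry no authority
    return required_scope in claims["scopes"]
```

In this sketch, a credential issued for five minutes with only `invoices:read` cannot be used for writes and stops working once it expires, which is the blast-radius limit described in the answer above.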

Vishwa: What key reductions or metrics best indicate that an organization has achieved effective governance over AI agent identities?

Eric: One of the clearest early indicators is a reduction in long-lived credentials. When agents are operating with short-lived, intent-scoped permissions, it shows that the organization has started to align access with actual behavior rather than convenience. That alone eliminates a huge category of avoidable risk.

Another strong signal is traceability. If you can answer the question “Which agent took what action, on whose behalf, and why?”, you’ve reached real maturity. End-to-end visibility into agent actions, including delegated decisions, creates accountability and makes security teams far more confident in allowing automation to scale.

Finally, look at the decline of shadow agents. As governance improves, the number of unsanctioned GPTs, scripts, or workflows with lingering access drops significantly. When ownership is assigned, lifecycle processes work, and “ghost” agents disappear, you know the program has turned a corner.
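The traceability question in this answer (which agent, what action, on whose behalf, and why) maps naturally onto a structured audit record. The Python sketch below is one possible shape for such a record; the class name `AgentAction`, its fields, and the helper functions are hypothetical and chosen only for illustration.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class AgentAction:
    """One audit record: which agent did what, for whom, and why."""
    agent_id: str
    action: str
    on_behalf_of: str
    reason: str
    timestamp: float


def to_log_line(event):
    """Serialize a record as one JSON line for an append-only audit log."""
    return json.dumps(asdict(event), sort_keys=True)


def actions_on_behalf_of(events, user):
    """Return every recorded action an agent took for the given user."""
    return [e for e in events if e.on_behalf_of == user]
```

Recording the delegating user and the stated reason with every action is what lets a security team later reconstruct delegated decisions, rather than seeing only that "the agent" did something.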

Vishwa: What common mistake do enterprises make when they treat AI agent identity as a one-time setup instead of a continuously governed lifecycle?

Eric: The biggest mistake is assuming agents are static. They’re not. Agents evolve as prompts change, models update, workflows grow, and data sources shift. Their access patterns drift over time, and if no one is watching, they drift into dangerous territory.

Another major challenge comes from the rise of “shared AI agent employees,” where a single agent may act on behalf of different humans and perform different tasks throughout the day.

This problem gets worse when development-time privileges linger. Engineers often grant expansive access early on to get an agent working. But when the agent moves to production, no one revisits the scope.

The agent keeps running with broad, inherited permissions that no longer match its real workload. Over time, the gap between the permissions an agent needs and the permissions it has introduces risk, especially when that same agent is shared across multiple users or workflows.

Without lifecycle governance (creation, review, right-sizing, credential rotation, and eventual retirement), organizations inevitably accumulate orphaned or over-privileged agents. These become the AI equivalent of forgotten service accounts: quiet, persistent, and risky.
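A periodic review step in that lifecycle could look something like the following Python sketch. It assumes the organization can already list each agent's granted permissions, the permissions it actually used, its owner, and its last activity; the function name `review_agent`, the finding labels, and the 30-day staleness threshold are illustrative assumptions, not a prescribed policy.

```python
def review_agent(granted, used, owner, days_since_last_use, stale_after_days=30):
    """Flag an agent that is orphaned, stale, or holding unused permissions."""
    # Permissions that were granted but never exercised are candidates to revoke.
    unused = sorted(set(granted) - set(used))
    findings = []
    if owner is None:
        findings.append("orphaned")
    if days_since_last_use > stale_after_days:
        findings.append("stale")
    if unused:
        findings.append("over-privileged")
    return {"findings": findings, "revoke": unused}
```

Run on a schedule, a check like this turns "no one revisits the scope" into a concrete list of permissions to remove and agents to retire or reassign.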

Vishwa: As AI agents proliferate across tools, clouds, and LLM ecosystems, how do you see identity governance evolving, and what role will policy portability and interoperability play in securing them?

Eric: Identity governance is shifting from identifying who is acting to understanding what the actor is trying to do. With humans, identity tells you most of what you need. With agents, intent becomes just as important.

The future of governance will need to evaluate not only identity but purpose, context, and expected behavior.

As agents move across clouds, SaaS tools, and LLM platforms, guardrails must move with them. If policy doesn’t travel with the agent, you create fragmented trust zones where attackers can hide or where automation behaves unpredictably. Portability becomes essential if you want consistency.

Interoperability is the foundation. Agents don’t respect organizational boundaries, so your identity policies can’t be constrained by them either.

The future is a unified agent identity control plane that enforces consistent rules across any identity system, any runtime, any cloud, wherever the agents operate.