AI-Native App Boom Creates Security Blind Spots and Major Risks, New Report Finds

Key Takeaways

- Pervasive AI adoption: On average, 61% of new enterprise applications now include AI components, significantly expanding the corporate attack surface.

- Shadow AI proliferation: 62% of security practitioners report having no way to identify where LLMs are in use across their organizations.

- Frequent security incidents: Most enterprises have already experienced LLM-related incidents, including prompt injection (76%), vulnerable LLM code (66%), and jailbreaking (65%).

A new report on AI-native application security reveals that the rapid integration of artificial intelligence in enterprise environments is creating critical security blind spots. The report, which surveyed 500 security practitioners, indicates that 63% believe AI-native applications are more susceptible to threats than traditional applications.

AI Adoption Outpaces Enterprise Security

The rush to adopt LLMs and generative AI has outpaced the capabilities of security teams, leaving organizations exposed to a new class of vulnerabilities.

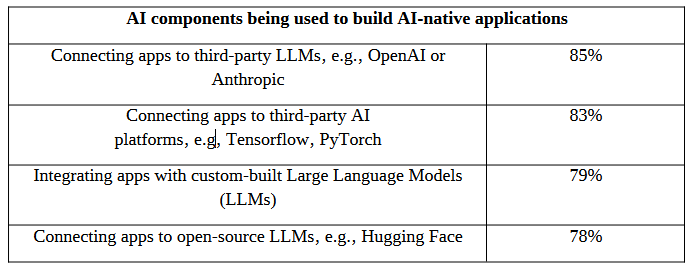

According to the "State of AI-Native Application Security 2025" report from Harness, on average, 61% of new applications are designed with AI components.

The Growing Challenge of Shadow AI

The proliferation of unauthorized AI use, termed "shadow AI," is a primary concern. The research found that 75% of security leaders believe the security issues caused by shadow AI will soon eclipse those caused by shadow IT.

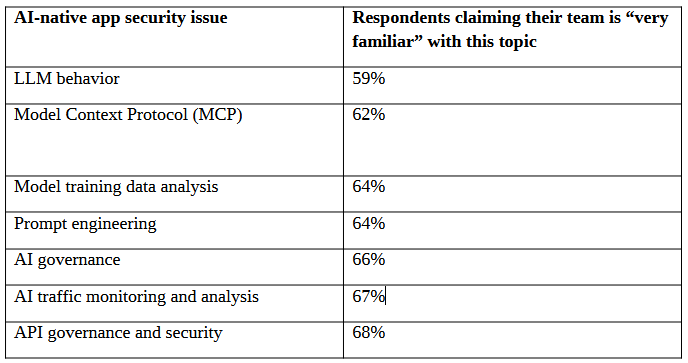

This is compounded by a lack of visibility, as 62% of security teams have no way to track where LLMs are deployed within their infrastructure. This creates a significant blind spot, making it difficult to monitor API traffic, data flows, and access controls for AI components.

- 75% say shadow AI will eclipse the security issues caused by shadow IT

- 74% say AI sprawl will blow API sprawl out of the water when it comes to security risk

- 72% say shadow AI is a gaping chasm in their security posture

- 66% say they are flying blind when it comes to securing AI-native apps

- 62% say they have no way to tell where LLMs are in use across their organization
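One common starting point for closing this visibility gap is to look for outbound traffic to known LLM API endpoints. The sketch below is illustrative only and is not taken from the report: the `(service, url)` log shape, the `find_llm_usage` helper, and the sample service names are hypothetical, and the host list is a small, non-exhaustive set of public LLM API hostnames.

```python
from collections import Counter
from urllib.parse import urlparse

# Illustrative, non-exhaustive list of public LLM API hostnames (assumption).
LLM_API_HOSTS = {
    "api.openai.com",
    "api.anthropic.com",
    "generativelanguage.googleapis.com",
}


def find_llm_usage(records):
    """Count outbound requests to known LLM API hosts, grouped by calling service.

    `records` is an iterable of (service_name, url) pairs, a hypothetical
    shape standing in for whatever an egress proxy or API gateway logs.
    """
    usage = Counter()
    for service, url in records:
        host = urlparse(url).hostname
        if host in LLM_API_HOSTS:
            usage[(service, host)] += 1
    return usage


# Made-up sample data for demonstration.
sample_log = [
    ("billing", "https://api.stripe.com/v1/charges"),
    ("support-bot", "https://api.openai.com/v1/chat/completions"),
    ("support-bot", "https://api.openai.com/v1/chat/completions"),
    ("notes-summarizer", "https://api.anthropic.com/v1/messages"),
]

for (service, host), count in sorted(find_llm_usage(sample_log).items()):
    print(f"{service} -> {host}: {count} request(s)")
```

A hostname list like this only catches hosted APIs that the team already knows about. Self-hosted models and traffic routed through intermediaries would need other signals, such as dependency manifests or gateway policies.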

The report highlights a significant breakdown in collaboration between development and security teams, which exacerbates security risks. A majority of respondents (74%) said developers often view security as a blocker to innovation, leading them to bypass established governance processes and contributing to the rise of shadow AI.

AI Security Recommendations

The report notes that most organizations have already suffered security incidents related to LLM vulnerabilities, including prompt injection (76%), vulnerable code (66%), and jailbreaking (65%).

Furthermore, only 43% of organizations report that their developers consistently build AI-native applications with security integrated from the start. This points to a critical need for implementing DevSecOps for AI:

- Ensuring security is a foundational component of the AI development lifecycle, with clear governance policies and communication between developers and security.

- Discovering all new AI components as they appear, and logging and monitoring them.

- Achieving real-time visibility into AI components and communicating services, focusing especially on API traffic.

- Using dynamic application security testing (DAST) to identify security risks prior to production.

- Reducing sensitive data disclosure in AI-native apps in production by inspecting prompts and monitoring responses.
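As a rough illustration of the last recommendation, prompt and response inspection can begin with simple pattern-based redaction applied before text reaches an LLM or is returned to a user. This is a minimal sketch under stated assumptions, not a method described in the report: the `SENSITIVE_PATTERNS` set and the `redact` helper are hypothetical, and the three patterns are deliberately narrow.

```python
import re

# Hypothetical, deliberately small pattern set; real deployments need
# broader coverage and usually a dedicated data loss prevention tool.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "bearer_token": re.compile(r"\bBearer\s+[A-Za-z0-9._~+/-]{20,}=*"),
}


def redact(text):
    """Return (redacted_text, findings).

    `findings` lists the names of the patterns that matched, which can be
    logged for monitoring without storing the sensitive values themselves.
    """
    findings = []
    for name, pattern in SENSITIVE_PATTERNS.items():
        text, count = pattern.subn(f"[REDACTED:{name}]", text)
        if count:
            findings.append(name)
    return text, findings


# Made-up prompt for demonstration.
prompt = "Summarize the ticket from jane.doe@example.com, SSN 123-45-6789."
safe_prompt, findings = redact(prompt)
print(safe_prompt)
print(findings)
```

The same function can be run over model responses before they are shown to users. Regular expressions miss context-dependent data such as names or internal project details, so this kind of filter is a first layer rather than a complete control.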

These findings align with other recent reports, which found that security gaps are forcing firms to rethink AI adoption, that cloud adoption is outpacing security readiness, and that API security is lagging as AI adoption accelerates.

The most recent report warned that 65% of the top AI 50 companies leaked sensitive data on GitHub, including API keys and tokens.