GenAI Risks and Data Violations in the Retail Sector; OneDrive, GitHub, and Google Drive Leveraged for Malware Dissemination

- Accelerated GenAI adoption: 95% of retail organizations now use GenAI apps, a significant increase from 73% in the previous year.

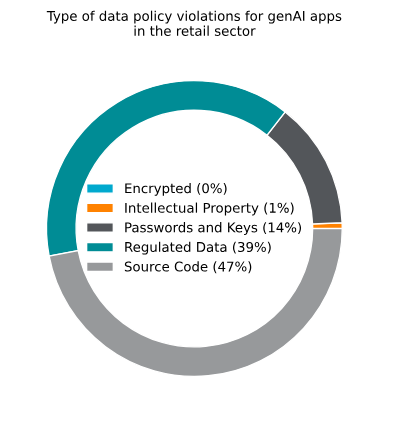

- What is at risk: Source code accounts for 47% of sensitive data exposed to GenAI apps, with regulated data following at 39%.

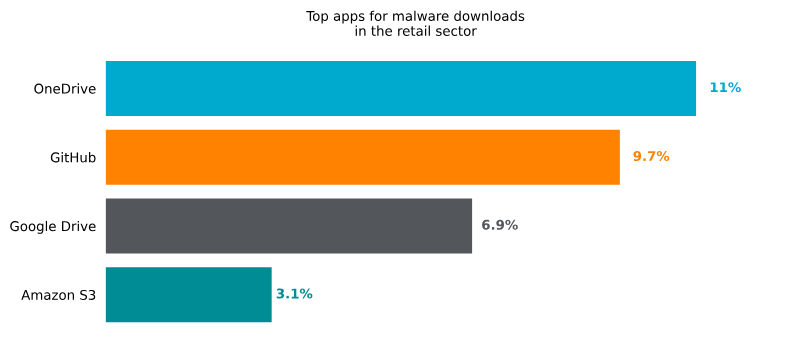

- Malware via trusted apps: Microsoft OneDrive is the top cloud application for malware downloads in the retail sector.

The Netskope Threat Labs Report for Retail 2025 identifies accelerating retail cybersecurity trends, highlighting significant risks associated with the rapid adoption of generative artificial intelligence (GenAI) and persistent data policy violations.

GenAI Risks in Retail

The analysis reveals that while 95% of retail organizations have embraced GenAI tools like ChatGPT, Microsoft Copilot, and Google Gemini, this integration has introduced new vectors for data exposure. A primary concern is the nature of sensitive information being uploaded to these AI platforms.

Source code represents 47% of all data policy violations in GenAI applications within the retail sector, followed by regulated data at 39%. This indicates that employees are using these tools to process proprietary and confidential business information, often on unapproved or personal accounts, thereby expanding the organizational attack surface.

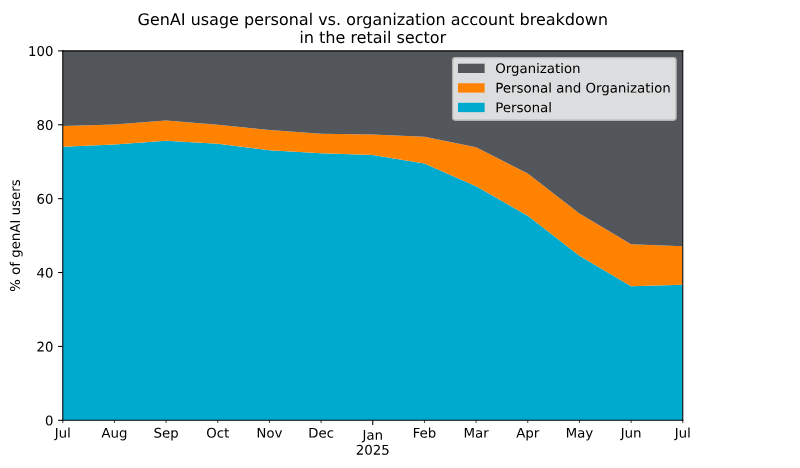

The report notes a shift from personal GenAI accounts to enterprise-sanctioned solutions, but shadow AI and unsanctioned API usage remain considerable challenges.

“GenAI adoption in the retail sector is accelerating, with organisations increasingly using platforms like Azure OpenAI, Amazon Bedrock, and Google Vertex AI. While the use of personal genAI accounts is declining, organisation-approved platforms are gaining traction, reflecting a shift toward more controlled and monitored usage,” said Gianpietro Cutolo, Cloud Threat Researcher at Netskope Threat Labs.

Malware Distribution via Legitimate Services

Attackers also continue to exploit trusted cloud services to distribute malware. Microsoft OneDrive, GitHub, and Google Drive are the most common vectors, with OneDrive leading for malware downloads in retail, because their high adoption among users gives malicious files a wide reach.

“These massive ecosystems, and the fact that most employees trust what they find in them, make it easier for attackers to hide malware and compromise employees and organisations,” Ray Canzanese, Director of Netskope Threat Labs, told TechNadu.

“Retailers are strengthening data security and monitoring cloud and API activity, helping to reduce exposure of sensitive information such as source code and regulated data. The goal is clear: leverage the benefits of AI innovation while protecting the organisation’s most valuable data assets,” Cutolo asserted.

Mitigation Advice

To mitigate these GenAI risks in retail, Netskope recommends that organizations inspect all web and cloud traffic for threats, implement robust data loss prevention (DLP) policies to monitor sensitive data movement, and block access to high-risk applications that serve no legitimate business purpose.

“Cloud-native DLP can prevent employees from uploading sensitive data to unapproved cloud storage instances, limiting the data that can be exfiltrated and broadly reducing the risk associated with unapproved app use,” asserted Canzanese.
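As a rough illustration of the kind of check Canzanese describes, the sketch below decides whether an upload should be allowed based on where it is going and what it contains. It is not how Netskope or any specific DLP product works: the allowlisted instance name, the `evaluate_upload` function, and the three regular-expression detectors are all hypothetical, and real DLP engines rely on far richer classification than simple patterns.

```python
import re
from dataclasses import dataclass

# Hypothetical allowlist of organisation-approved storage instances.
APPROVED_INSTANCES = {"corp-tenant.example.com"}

# Illustrative detectors only (assumptions, not a vendor rule set).
DETECTORS = {
    # Lines that look like the start of common source-code constructs.
    "source_code": re.compile(
        r"^\s*(def |class |import |#include\b|function\s+\w+\s*\()",
        re.MULTILINE,
    ),
    # 13 to 16 digits, optionally separated by spaces or hyphens.
    "payment_card": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
    # PEM-style private key header.
    "private_key": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
}


@dataclass
class Verdict:
    allow: bool
    matched: list


def evaluate_upload(destination: str, content: str) -> Verdict:
    """Allow uploads to approved instances; block sensitive content elsewhere."""
    matched = sorted(name for name, rx in DETECTORS.items() if rx.search(content))
    if destination in APPROVED_INSTANCES:
        # Approved destination: allow, but still report what was detected.
        return Verdict(allow=True, matched=matched)
    # Unapproved destination: allow only if nothing sensitive was detected.
    return Verdict(allow=not matched, matched=matched)


if __name__ == "__main__":
    print(evaluate_upload("personal.example.net", "import os\nprint(os.getcwd())"))
    print(evaluate_upload("corp-tenant.example.com", "import os\nprint(os.getcwd())"))
```

The design choice worth noting is that the destination check and the content check are separate: the same file can be acceptable in a sanctioned instance and blocked in a personal one.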

“As IT managers, we must no longer block innovation; we must manage it securely. That’s why we rely on modern security solutions that give us full transparency and control over sensitive data flows in the age of cloud computing and AI, and that can withstand constantly evolving cyber attacks,” said Stefan Baldus, CISO at HUGO BOSS. “This is the only way we can harness the creative power of AI while ensuring the protection of our brand and customer data.”

Security teams trying to detect unapproved deployments of AI agents are advised to start by building capabilities to monitor for programmatic access. “Agents relying on external models will often make non-browser API calls to LLM endpoints, for example, api.openai.com. Detecting this programmatic traffic is the primary way to unmask a shadow agent,” Canzanese said.

“The deployment of tools such as LLM interfaces, like Ollama, or regular access from employees to open source platforms known for hosting AI models and tools, are also strong signals that agents are being deployed, and good starting points for investigation,” added Canzanese.
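The signals Canzanese lists can be turned into a simple first-pass filter over traffic logs. The sketch below is one possible approach under several assumptions: the log record shape (`user`, `host`, `port`, `user_agent`) is hypothetical, the browser test is a crude User-Agent prefix heuristic that can be spoofed, `huggingface.co` is our own example of a model-hosting platform (the quote names none), and 11434 is the port Ollama listens on by default. Only `api.openai.com` comes from the quote itself.

```python
from collections import Counter

# api.openai.com is the example given in the quote; extend as needed.
LLM_API_HOSTS = {"api.openai.com"}
# Assumption: an example model-hosting platform, not named in the source.
MODEL_HUB_HOSTS = {"huggingface.co"}
# Ollama's default listening port.
OLLAMA_DEFAULT_PORT = 11434


def is_browser(user_agent: str) -> bool:
    """Crude heuristic: mainstream browsers send a UA starting with 'Mozilla/'."""
    return user_agent.lower().startswith("mozilla/")


def find_shadow_agent_signals(records, hub_threshold=3):
    """Return (user, signal, detail) tuples worth investigating."""
    signals = []
    hub_hits = Counter()
    for r in records:
        host = r["host"].lower()
        # Signal 1: non-browser client calling an LLM API endpoint.
        if host in LLM_API_HOSTS and not is_browser(r.get("user_agent", "")):
            signals.append((r["user"], "programmatic_llm_api", host))
        # Signal 2: traffic to the default Ollama port.
        if r.get("port") == OLLAMA_DEFAULT_PORT:
            signals.append((r["user"], "ollama_default_port", host))
        # Signal 3 (counted here, evaluated below): model hub access.
        if host in MODEL_HUB_HOSTS:
            hub_hits[r["user"]] += 1
    for user, count in sorted(hub_hits.items()):
        if count >= hub_threshold:
            signals.append((user, "regular_model_hub_access", str(count)))
    return signals


if __name__ == "__main__":
    sample = [
        {"user": "alice", "host": "api.openai.com", "port": 443,
         "user_agent": "python-requests/2.31.0"},
        {"user": "bob", "host": "api.openai.com", "port": 443,
         "user_agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"},
        {"user": "carol", "host": "10.0.0.7", "port": 11434,
         "user_agent": "curl/8.4.0"},
    ]
    for signal in find_shadow_agent_signals(sample):
        print(signal)
```

Hits from a filter like this are starting points for investigation rather than proof of a shadow agent, since legitimate scripts and sanctioned integrations produce the same traffic patterns.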