Embedded Software Faces Critical AI Governance Risks, 2025 Report Finds

- AI integration issues: Organizations show vulnerabilities in how they manage AI integration within embedded systems.

- The discrepancy: Governance frameworks have not kept pace with the adoption of AI in embedded systems.

- Hidden risks: A perception gap between management and engineers is a significant source of hidden risk in many organizations.

A study has exposed significant vulnerabilities in how organizations manage artificial intelligence (AI) integration within embedded systems, revealing a dangerous disconnect between widespread AI adoption in embedded systems and inadequate governance frameworks.

Black Duck's comprehensive 2025 Embedded Software Quality and Safety Report surveyed 785 industry professionals across eight countries.

Widespread AI Adoption Outpaces Security Measures

The Black Duck 2025 Embedded Software report findings demonstrate unprecedented AI integration across the embedded software ecosystem.

An overwhelming 89.3% of organizations currently deploy AI-powered coding assistants, while 96.1% incorporate open source AI models directly into their products for critical functions, including data processing (37.3%), computer vision (35.9%), and process automation (34.8%).

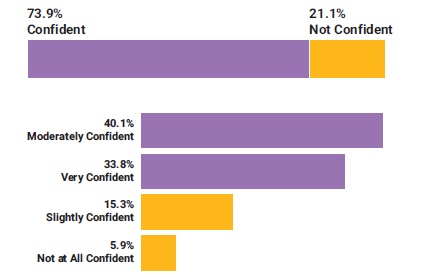

However, this rapid adoption has created substantial risk exposure. Over 21% of organizations lack confidence in their ability to prevent AI-generated code from introducing security vulnerabilities or operational defects.

More concerning, 18% of companies acknowledge that developers utilize AI tools against established company policies, creating shadow AI implementations that bypass organizational oversight entirely.

“AI interprets prompts as executable commands, so a single malformed prompt can reasonably result in wiped systems,” said Diana Kelley, Chief Information Security Officer at Noma Security.

According to recent research from Menlo Security, AI adoption has been large enough to change the overall pattern of web traffic. Web traffic to AI tool sites increased from 7 billion visits in February 2024 to 10.53 billion visits in January 2025, a rise of roughly 50 percent.

While some AI deployments are transitioning to desktop or private applications, Menlo Security estimates that up to 80 percent of GenAI is still accessed via the browser, whether through web-based AI sites or integration into existing web services.

SBOM Requirements Drive Commercial Transformation

Software Bill of Materials (SBOM) compliance has evolved from a regulatory checkbox to a competitive necessity, with 70.8% of organizations now mandated to produce SBOMs.

Customer and partner requirements (39.4%) have emerged as the primary driver of SBOM adoption, surpassing industry regulations (31.5%).

Mayuresh Dani, Security Research Manager at Qualys, said the easiest approach for organizations is to request SBOMs from their vendors. “Organizations should maintain and audit the existence of exposed ports by their network devices. These should then be mapped to the installed software based on the vendor-provided SBOM,” he said.
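As a rough illustration of the mapping Dani describes, the Python sketch below matches audited ports against a vendor SBOM. Everything in it is an assumption made for the example: the SBOM is a simplified, CycloneDX-style list of components, the port audit is a hand-written dictionary rather than real scanner output, and `map_ports_to_sbom` is a hypothetical helper, not part of any SBOM tool.

```python
# Hypothetical, simplified vendor SBOM: a CycloneDX-style "components" list.
sbom = {
    "components": [
        {"name": "openssh", "version": "9.6"},
        {"name": "nginx", "version": "1.24.0"},
    ]
}

# Hypothetical audit result: exposed port -> software found listening on it.
exposed_ports = {22: "openssh", 443: "nginx", 23: "telnetd"}


def map_ports_to_sbom(exposed_ports, sbom):
    """Split exposed ports into those backed by an SBOM component and those not."""
    by_name = {c["name"]: c for c in sbom.get("components", [])}
    mapped, unaccounted = {}, []
    for port, software in sorted(exposed_ports.items()):
        component = by_name.get(software)
        if component is None:
            # Listening software that the vendor SBOM does not declare.
            unaccounted.append(port)
        else:
            mapped[port] = f'{component["name"]} {component["version"]}'
    return mapped, unaccounted


mapped, unaccounted = map_ports_to_sbom(exposed_ports, sbom)
print("Mapped to SBOM components:", mapped)
print("Ports with no SBOM entry:", unaccounted)
```

In this sketch, a port whose software has no SBOM entry is the case worth following up on, since it points to software the vendor did not declare.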

Developer Challenges and Perception Gaps

Embedded software trends reveal significant operational challenges. System complexity (18.7%) and tight release timelines (18.1%) represent the most substantial obstacles to eliminating critical defects. Yet nearly 40% of respondents describe themselves as only “mostly successful” because they “sometimes need to compromise” on quality to hit a deadline.

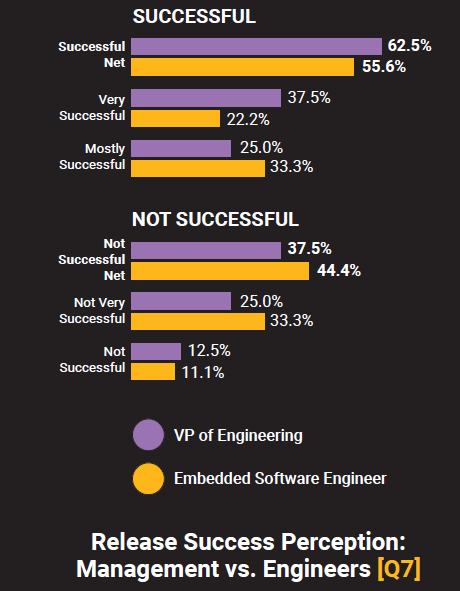

A perception gap exists between management and engineering teams, with 86% of CTOs reporting project success compared to only 56% of hands-on embedded engineers, the report said. “A manager sees a product shipped on time and calls it a win. An engineer sees the shortcuts, the accepted risks, and the technical debt kicked down the road and calls it a compromise. The perception gap is a significant source of hidden risk in many organizations.”

Industry Recommendations

The report highlights the pressing need for comprehensive AI governance frameworks, integrated security tools, and alignment between technical realities and management expectations. Organizations should strike a balance between innovation velocity and risk management to maintain a competitive advantage while ensuring product safety and security.

Guy Feinberg, Growth Product Manager at Oasis Security, says the real risk isn’t AI itself but the practice of managing non-human identities (NHIs) with weaker security controls than human users. He added that AI agents must have “least privilege access, monitoring, and clear policies to prevent abuse,” as security teams need visibility.

Nicole Carignan, SVP, Security & AI Strategy, and Field CISO at Darktrace, said “AI systems are only as reliable as the data they’re built on,” recommending that organizations adopt strong data science principles and understand how data is sourced, structured, classified, and secured before thinking meaningfully about AI governance, which requires deep cross-functional collaboration.

Carignan said organizations should tailor their AI policies to their “unique risk profile, use cases, and regulatory requirements.”